The growing sophistication of large language models (LLMs) has shifted AI systems from reactive assistants to proactive, decision-making agents. These agents can reason, plan, and execute tasks across multiple systems, opening new possibilities in process automation, knowledge management, and digital operations.

Microsoft’s AI Agent Fundamentals learning module provides a foundation for building such agents using Azure AI Foundry. However, developing an enterprise-grade agent involves far more than configuring a model. It requires careful architectural decisions around autonomy, orchestration, safety, and cost management.

This article unpacks both the fundamentals and the nuances of how to build an AI agent on Azure, exploring tradeoffs, design considerations, and pitfalls often overlooked in introductory materials.

What makes an AI agent different from a chatbot

A chatbot is designed to answer.

An agent is designed to act.

While conversational systems rely on pattern recognition and contextual recall, AI agents operate autonomously, pursuing defined goals through reasoning, planning, and tool use.

Azure’s definition centers on five components:

These elements together make the system “agentic”, capable not only of responding, but of deciding what to do next. Azure’s managed runtime wraps these into a cohesive environment that simplifies deployment and compliance.

Inside Azure AI Foundry: the foundation for agent development

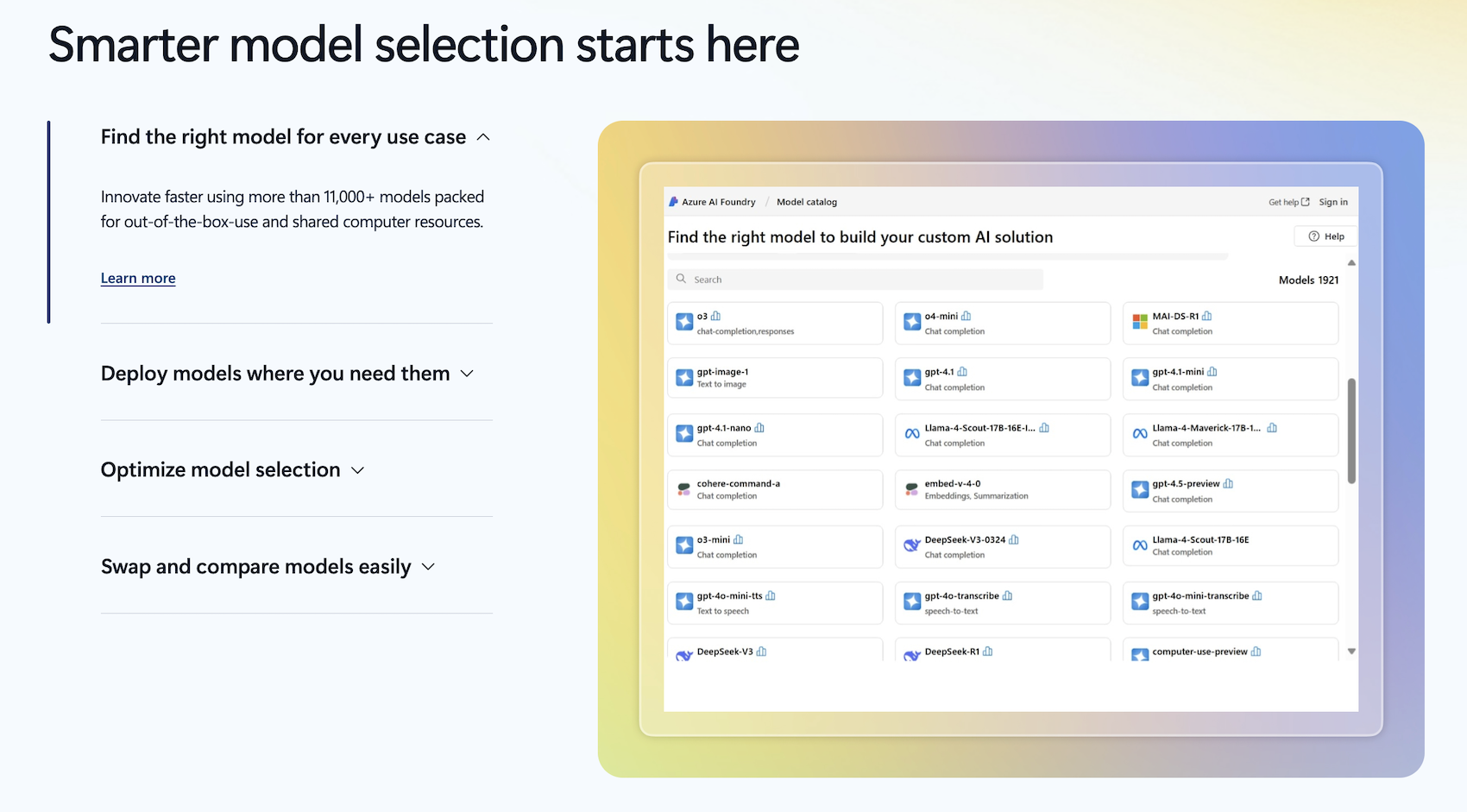

Azure AI Foundry serves as the central hub for developing and operating AI agents. It combines model access, orchestration services, governance, and observability within a unified environment.

The Agent Service

The Agent Service within Azure AI Foundry acts as a managed runtime. It:

- Integrates model, instructions, tools, and policies into one logical unit.

- Handles orchestration, state persistence, retries, and tool invocation.

- Provides enterprise-grade features: RBAC, encryption, VNet isolation, and telemetry.

Developers can work entirely through the Azure AI Foundry portal or integrate via SDKs (.NET, Python, JavaScript). The service abstracts away infrastructure, allowing teams to focus on agent logic rather than deployment complexity.

The quickstart flow

The Learn module introduces a practical five-step workflow:

- Create an AI Foundry project in your Azure subscription.

- Deploy a base model (as of late 2025, typically GPT-4o) to the project.

- Define the agent by specifying its instructions, expected behaviors, and available tools.

- Test the agent in the Agent Playground to simulate tasks and responses.

- Expose the agent endpoint and call it programmatically through Azure SDKs or REST API.

Behind the scenes, the Foundry project manages dependencies, authentication, and resource allocation automatically.

Building blocks of an AI agent

While the Azure UI streamlines configuration, the conceptual underpinnings remain critical.

Defining the agent’s role and autonomy

The first design decision is the scope of autonomy. Will your agent simply assist with document retrieval, or will it execute operations across systems?

- Narrow-scope agents (e.g., summarization, translation) are more predictable, cheaper, and easier to test.

- Goal-oriented agents require broader access to data, dynamic planning, and error recovery mechanisms.

Defining autonomy early determines the level of governance and tool access the agent requires.

Tooling and grounding

Agents are only as useful as the tools they can call. Tools provide structured access to actions and data sources: REST APIs, Azure Functions, or MCP (Model Context Protocol) servers.

Key design decisions include:

- Data grounding: Should the agent use retrieval-augmented generation (RAG) for accuracy?

- Tool safety: How are input/output schemas validated to prevent injection or misuse?

- Performance: Each tool invocation adds latency and cost. Caching and batching help optimize.

Azure allows developers to register custom tools and specify schema constraints that define how agents invoke them.
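As a minimal sketch of the tool-safety point above, the following checks a tool call's arguments against a declared contract before anything is executed. The `ToolSpec` class, the `call_tool` helper, and the `lookup_order` tool are hypothetical names used for illustration; they are not part of any Azure SDK.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical tool contract: a name, typed parameters, and a handler."""
    name: str
    params: Dict[str, type]  # parameter name -> required Python type
    handler: Callable[..., Any]


def validate_args(spec: ToolSpec, args: Dict[str, Any]) -> None:
    """Reject unknown, missing, or wrongly typed arguments."""
    unknown = set(args) - set(spec.params)
    missing = set(spec.params) - set(args)
    if unknown or missing:
        raise ValueError(
            f"{spec.name}: unknown={sorted(unknown)} missing={sorted(missing)}"
        )
    for key, expected in spec.params.items():
        if not isinstance(args[key], expected):
            raise TypeError(f"{spec.name}: '{key}' must be {expected.__name__}")


def call_tool(spec: ToolSpec, args: Dict[str, Any]) -> Any:
    """Validate first, then invoke the handler with the checked arguments."""
    validate_args(spec, args)
    return spec.handler(**args)


# Illustrative tool: the handler stands in for a real API call.
lookup_order = ToolSpec(
    name="lookup_order",
    params={"order_id": int},
    handler=lambda order_id: {"order_id": order_id, "status": "shipped"},
)
```

Validating before invocation means a model-generated argument such as a string where an integer is expected is rejected at the boundary, rather than reaching the downstream system.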

State management

Agents require memory: short-term (conversation context) and long-term (session history). On Azure, this typically relies on Cosmos DB or Azure Blob Storage for persistent state.

- Stateless agents: Lower cost, but less context continuity.

- Stateful agents: Better reasoning and multi-turn logic but introduce storage, versioning, and privacy concerns.

Careful schema design and retention policy definition are essential for compliance.
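To make the retention point concrete, here is a small sketch of a stateful session store that drops messages older than a retention window. `SessionStore` is a hypothetical in-memory stand-in; a real deployment would back it with a persistent service such as Cosmos DB, whose API is not shown here.

```python
import time
from typing import Dict, List, Optional, Tuple


class SessionStore:
    """In-memory stand-in for a persistent conversation store."""

    def __init__(self, retention_seconds: float) -> None:
        self.retention_seconds = retention_seconds
        self._messages: Dict[str, List[Tuple[float, str]]] = {}

    def append(self, session_id: str, message: str,
               now: Optional[float] = None) -> None:
        """Store a message with its timestamp (injectable for testing)."""
        ts = time.time() if now is None else now
        self._messages.setdefault(session_id, []).append((ts, message))

    def history(self, session_id: str,
                now: Optional[float] = None) -> List[str]:
        """Return messages inside the retention window and purge the rest."""
        ts = time.time() if now is None else now
        cutoff = ts - self.retention_seconds
        kept = [(t, m) for t, m in self._messages.get(session_id, []) if t >= cutoff]
        if session_id in self._messages:
            self._messages[session_id] = kept
        return [m for _, m in kept]
```

Purging on read keeps the example short; a production system would more likely rely on the storage layer's own expiry mechanism.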

Multi-agent architectures: delegation and orchestration

In complex environments, it’s inefficient for a single agent to handle all logic. Azure introduces connected agents, an orchestration model that lets a “main” agent delegate tasks to specialized sub-agents.

Benefits

Multi-agent architectures are still young, and their benefits and pitfalls are not yet fully understood. Even so, several advantages are already clear:

- Modularity: Each sub-agent has a focused domain (e.g., compliance checking, summarization).

- Scalability: Teams can iterate or redeploy one agent without affecting the whole system.

- Maintainability: Logs and telemetry are scoped per agent, easing debugging.

Pitfalls

However, connected agents introduce orchestration uncertainty:

- The routing logic is model-driven, meaning misclassification or over-delegation can occur.

- Circular dependencies may arise if agents are allowed to invoke each other without boundaries.

- Latency compounds as multiple agents exchange reasoning chains.

Teams can mitigate these risks by defining explicit task boundaries, timeout policies, and fallback behavior.
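The mitigations above can be sketched as a small router that enforces a delegation depth limit, a time budget, and a fallback response. Everything here is hypothetical: `Delegator` and the `ping`/`pong` agents are plain Python stand-ins for model-backed agents, not the connected-agents API.

```python
import time
from typing import Callable, Dict

# An agent receives the task, the router, and its current delegation depth.
AgentFn = Callable[[str, "Delegator", int], str]


class Delegator:
    """Hypothetical router enforcing depth, time budget, and fallback."""

    def __init__(self, agents: Dict[str, AgentFn], max_depth: int = 3,
                 timeout_seconds: float = 5.0, fallback: str = "FALLBACK") -> None:
        self.agents = agents
        self.max_depth = max_depth
        self.timeout_seconds = timeout_seconds
        self.fallback = fallback
        self._deadline = float("inf")

    def run(self, name: str, task: str) -> str:
        """Start a new run with a fresh time budget."""
        self._deadline = time.monotonic() + self.timeout_seconds
        return self.delegate(name, task, depth=0)

    def delegate(self, name: str, task: str, depth: int) -> str:
        # Checked before each hop: a cooperative budget, not a hard kill.
        if depth >= self.max_depth or time.monotonic() > self._deadline:
            return self.fallback
        agent = self.agents.get(name)
        if agent is None:
            return self.fallback
        try:
            return agent(task, self, depth + 1)
        except Exception:
            return self.fallback


def summarizer(task: str, router: Delegator, depth: int) -> str:
    return f"summary of: {task}"


def ping(task: str, router: Delegator, depth: int) -> str:
    return router.delegate("pong", task, depth)  # circular on purpose


def pong(task: str, router: Delegator, depth: int) -> str:
    return router.delegate("ping", task, depth)
```

With the default `max_depth` of 3, the deliberately circular `ping`/`pong` pair stops after three hops and returns the fallback instead of looping indefinitely.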

Governance, observability, and safety in AI agent development

When deploying autonomous systems in production, governance is non-negotiable. Azure addresses this through built-in observability and policy enforcement layers.

Monitoring and telemetry

Every agent’s execution can be traced through Application Insights and OpenTelemetry integration. Logs capture tool calls, responses, reasoning steps, and potential policy violations.

Granular observability enables both debugging and compliance auditing, critical for regulated industries such as healthcare or finance.

Content safety

Azure applies layered safeguards:

- Input sanitization and content filters to block injection or unsafe outputs.

- Rate limits and access controls via Microsoft Entra ID and RBAC.

- Network isolation for agents operating within private VNets.

Still, developers should treat these as a baseline, not a complete defense. Prompt injection, privilege escalation through API chaining, and model hallucination remain active risks. Defensive prompt engineering and explicit tool allowlisting are advisable.
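As an illustration of restricting each agent to an explicit list of tools, as advised above, the following sketch denies any tool call that is not listed for the calling agent. The agent names, tool names, and the `authorize` helper are all hypothetical.

```python
from typing import Dict, FrozenSet

# Hypothetical per-agent allowlists; anything not listed is denied.
ALLOWED_TOOLS: Dict[str, FrozenSet[str]] = {
    "support-agent": frozenset({"search_kb", "create_ticket"}),
    "billing-agent": frozenset({"get_invoice"}),
}


def authorize(agent: str, tool: str) -> None:
    """Raise PermissionError unless the tool is explicitly allowed."""
    if tool not in ALLOWED_TOOLS.get(agent, frozenset()):
        raise PermissionError(f"{agent} may not call {tool}")
```

Defaulting unknown agents to an empty set means a newly added agent can call nothing until someone grants it tools deliberately.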

Design tradeoffs and engineering decisions

Every AI agent involves a series of technical tradeoffs. Below are the most consequential ones.

For enterprise deployments, the optimal balance depends on regulatory constraints, data sensitivity, and expected ROI.

A common pattern is to start narrow (one agent, one task, limited tools) and expand iteratively as governance and testing frameworks mature.

Pitfalls often overlooked in early-stage AI development projects

Early-stage AI agent projects tend to repeat the same mistakes. The following are the most common process oversights and ways to avoid them.

Latency stacking

Each reasoning loop or tool invocation compounds latency. Even minor inefficiencies in multi-agent systems can push total response time beyond acceptable thresholds. Solutions include:

- Caching previous results.

- Using lightweight reasoning agents for routing.

- Limiting recursion depth in delegation.
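The caching suggestion can be sketched with Python's standard `functools.lru_cache`. The `fetch_policy_document` tool is hypothetical, and its body stands in for a slow network call.

```python
from functools import lru_cache

# Counts how often the slow path actually runs.
backend_calls = {"count": 0}


@lru_cache(maxsize=256)
def fetch_policy_document(doc_id: str) -> str:
    """Hypothetical read-only tool; repeated calls are served from cache."""
    backend_calls["count"] += 1
    return f"contents of {doc_id}"
```

This kind of caching is only appropriate for idempotent, read-only tools where slightly stale results are acceptable; tools with side effects should not be cached this way.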

Cost unpredictability

Azure pricing depends on model size, token count, and tool invocation frequency. Without guardrails, dynamic prompts or extended reasoning loops can quickly inflate costs. Logging token usage per session is critical to forecast and control expenses.
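A minimal sketch of per-session token logging with a hard budget might look like the following. `TokenLedger` is a hypothetical helper, and the price passed to `estimated_cost` is supplied by the caller; no real Azure prices are assumed.

```python
from collections import defaultdict
from typing import DefaultDict


class TokenBudgetExceeded(RuntimeError):
    """Raised when a session goes over its token budget."""


class TokenLedger:
    """Hypothetical per-session token tracker with a hard cap."""

    def __init__(self, max_tokens_per_session: int) -> None:
        self.max_tokens = max_tokens_per_session
        self.usage: DefaultDict[str, int] = defaultdict(int)

    def record(self, session_id: str, prompt_tokens: int,
               completion_tokens: int) -> int:
        """Add usage for one model call and enforce the budget."""
        self.usage[session_id] += prompt_tokens + completion_tokens
        if self.usage[session_id] > self.max_tokens:
            raise TokenBudgetExceeded(
                f"{session_id}: {self.usage[session_id]} > {self.max_tokens}"
            )
        return self.usage[session_id]

    def estimated_cost(self, session_id: str, price_per_1k_tokens: float) -> float:
        """Estimate spend from a caller-supplied price per 1,000 tokens."""
        return self.usage[session_id] / 1000 * price_per_1k_tokens
```

Raising once the cap is exceeded gives the orchestration layer a clear signal to stop a runaway reasoning loop instead of discovering it on the invoice.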

Debugging opacity

Agentic workflows involve implicit reasoning steps that are not always visible in logs, and inadequate tracing makes root-cause analysis difficult. Always enable structured reasoning logs and correlate them with tool activity.

Azure AI Foundry includes built-in tracing and OpenTelemetry integration, allowing teams to visualize each agent run, tool call, and reasoning chain within Application Insights. This makes debugging less opaque: developers can inspect spans, latency, and error paths directly rather than inferring them from raw logs.
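To show what correlating steps by run looks like, here is a standard-library sketch of span-style records that share a run ID. It is a simplified stand-in for real tracing, not the OpenTelemetry API; the `span` helper and the `SPANS` list are hypothetical.

```python
import time
from contextlib import contextmanager

# Collected span records; a real system would export these instead.
SPANS = []


@contextmanager
def span(run_id: str, name: str):
    """Record duration and status for one step, tagged with the run ID."""
    record = {"run_id": run_id, "name": name, "status": "ok"}
    start = time.perf_counter()
    try:
        yield record  # callers may attach extra attributes
    except Exception as exc:
        record["status"] = "error"
        record["error"] = repr(exc)
        raise
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        SPANS.append(record)
```

Because every record carries the same `run_id`, a failed tool call can be lined up with the steps that preceded it in the same run.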

Version drift

As teams update prompts or policies, agent behavior can shift subtly. Version every instruction set, prompt, and model pairing to maintain reproducibility across environments.
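One way to make such pairings traceable is to derive a fingerprint from the model name, instructions, and tool list, and log it with every run. The sketch below assumes a hypothetical `AgentVersion` record; the field names are illustrative.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass(frozen=True)
class AgentVersion:
    """Hypothetical record of everything that shapes agent behavior."""
    model: str
    instructions: str
    tools: Tuple[str, ...]

    def fingerprint(self) -> str:
        """Stable short hash: identical configurations give identical IDs."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]
```

Any change to the prompt, the model, or the tool list yields a different fingerprint, so a behavior shift can be tied to the configuration that produced it.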

Comparison: Azure-managed agents vs open frameworks

AI developers familiar with frameworks like LangChain, AutoGen, or Semantic Kernel may wonder how Azure’s approach compares.

For organizations operating under compliance regimes or preferring turnkey observability, Azure AI Foundry offers a faster and safer path.

For experimental teams, open frameworks remain valuable for testing novel architectures or reinforcement learning techniques.

Best practices for production-grade Azure agents

Microsoft’s module introduces the basics, but operationalizing AI agents requires additional engineering rigor:

- Design prompts as code. Store prompt versions in source control with semantic diffs.

- Implement robust fallback logic. Define what happens when a tool fails or returns invalid data.

- Apply zero-trust principles. Treat every tool invocation as potentially unsafe; validate inputs and outputs.

- Monitor tokens and reasoning depth. Prevent runaway reasoning loops.

- Instrument the agent early. Enable telemetry before scaling; retrofitting logs later is costly.

- Document tool contracts. Each tool should have a defined schema and failure policy.

- Test with adversarial prompts. Identify weak points before production exposure.

Following these practices turns experimentation into sustainable automation.
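As a small illustration of the fallback practice in the list above, the following sketch retries a failing tool a bounded number of times, validates the result, and returns a predefined fallback if nothing valid comes back. `call_with_fallback` and `flaky_inventory_lookup` are hypothetical names.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_fallback(tool: Callable[[], T], is_valid: Callable[[T], bool],
                       fallback: T, retries: int = 2) -> T:
    """Try the tool up to retries + 1 times, then return the fallback."""
    for _attempt in range(retries + 1):
        try:
            result = tool()
        except Exception:
            continue  # tool raised; try again if attempts remain
        if is_valid(result):
            return result
    return fallback


attempts = {"count": 0}


def flaky_inventory_lookup() -> dict:
    """Hypothetical tool that fails once, then succeeds."""
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise ConnectionError("transient failure")
    return {"sku": "A-1", "in_stock": 7}
```

Treating an invalid result the same as an exception keeps malformed tool output from flowing into later reasoning steps.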

For enterprise-grade agent development, the following practices are also valuable:

Identity and security management

- Use Managed Identities instead of API keys for tool and data access.

- Apply least-privilege RBAC roles to minimize lateral access.

- Route external API calls through Azure API Management to enforce rate limits and audit usage.

Data retention and compliance

- Define explicit data retention policies for stateful agents (Cosmos DB or Blob).

- Redact sensitive inputs before persisting logs or reasoning traces.

- Regularly review which tools and datasets each agent can access.
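The redaction step above can be sketched with two regular expressions applied before a message is written to logs. The patterns are deliberately simple assumptions that cover only email addresses and card-like digit runs; real deployments typically need a broader, tested set of detectors.

```python
import re

# Simplified illustrative patterns, not an exhaustive PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def redact(text: str) -> str:
    """Replace emails and card-like digit runs before persisting text."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)
```

Running `redact` at the point where logs and reasoning traces are persisted keeps the raw values out of storage, which also simplifies retention policy for those stores.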


The future of AI agents on Azure

Azure’s roadmap suggests a shift toward interoperable, discoverable agents. Emerging standards like the Agent-to-Agent (A2A) protocol and Model Context Protocol (MCP) will allow agents to dynamically discover and communicate with one another, similar to APIs on the web.

Additionally, research directions like reinforcement learning for agent behavior optimization (e.g., Agent Lightning, Cognitive Kernel-Pro) indicate that future iterations will move from static prompts toward adaptive, self-improving policies.

As these capabilities mature, the focus will likely move from building individual agents to governing agent ecosystems, ensuring safe collaboration across heterogeneous environments.

Conclusion

Building an AI agent on Azure starts as a simple configuration exercise, but scaling it into production requires architectural discipline.

Azure AI Foundry streamlines orchestration, observability, and governance, giving teams an enterprise-ready framework. Yet the hardest part remains conceptual: defining autonomy boundaries, managing tool access, and preventing cascading errors in reasoning.

By understanding these tradeoffs and applying structured design decisions early, developers can leverage Azure’s ecosystem to create intelligent, compliant, and cost-efficient AI agents ready for real-world workloads.
