AI use case prioritization as a fundamental success factor

AI adoption programs succeed when the organization makes disciplined and calculated choices. That discipline starts with deciding which problems to tackle first and why. Most teams are flush with ideas - personalization here, demand forecasting there, an ambitious automation initiative somewhere else - yet capacity, data, and change bandwidth are finite.

Use case prioritization turns a long wish list into a sequence of moves that compound: early wins pay for later bets, and each project lays groundwork (data pipelines, evaluation methods, governance) for the next.

A good selection process creates shared context. Stakeholders see the scoring criteria, understand trade-offs, and can track why a particular case moved ahead of another. That transparency shortens meetings and reduces the back-and-forth that derails timelines. It also surfaces constraints early. If a use case depends on customer event data that isn’t captured reliably, it moves to a later wave with an explicit data plan rather than consuming months of effort before anyone notices the gap.

The practical outcome is momentum. When a team regularly converts ideas into deployed capabilities with measurable benefits, confidence rises, budgets unlock, and adjacent business units begin to participate. Prioritization is the habit that produces that momentum.

Why use case prioritization makes or breaks AI adoption

AI use case prioritization is about what to do, in what order, and why. It is the bridge between ideas and outcomes. Done well, it focuses scarce time, budget, and data on the few initiatives that actually move the needle. Done poorly, it produces prototypes that never leave the lab.

When AI initiatives fail, the reasons are usually predictable: the problem is poorly scoped, the data is unavailable or unfit, the value is unproven, or the solution is hard to operate at scale. Prioritization is how you surface those constraints early and choose battles you can win.

A solid prioritization process creates three benefits:

- Clarity - Everyone sees the same scoring model and the trade-offs behind decisions.

- Momentum - You sequence quick wins first to fund and de-risk the rest.

- Defensibility - You can explain to a CFO or regulator why a use case is first, second, or parked.

Before ranking use cases, you first need a broad pool of candidates. The practices below cover this early part of the process.

How to build a high-quality AI use case candidate list

Before scoring, you need a thoughtful pool of candidates. The way you compile that list should reflect the reality of your organization - size, data maturity, and IT support - and the shape of your business.

Start with your profile

If you’re a large enterprise with several data sources and a staffed IT/MLOps function, you can cast a wider net: include multi-system initiatives (e.g., cross-channel churn prevention) and cases that yield reusable assets (e.g., a feature store or a customer identity graph).

If you’re a smaller company or operating with limited IT support, focus on narrow, API-ready solutions where integration is straightforward and the first value appears quickly (think demand forecasts at an SKU-region level, NLP triage for support tickets, or route optimization delivered as a managed service).

Consider data availability early

Where you have stable, accessible data - sales ledgers, support transcripts, telemetry, claims histories - you can nominate cases for an early wave.

Where data is fragmented or gated, capture the idea but pair it with a specific data action (instrumentation, contracts, labeling). This prevents “wish-casting” and keeps the backlog honest.

Organize by department and outcome

Walk each function - Marketing, Sales, Operations, Finance, HR, Customer Experience - and ask the same questions:

- What outcome would change your quarter?

- What manual process burns time every week?

- Where do you need earlier warning or better routing?

- Where do you have plenty of data but few insights?

- Which AI-powered initiatives have your competitors already deployed in this area?

Tie each idea to a business goal (grow revenue, reduce cost, mitigate risk, improve experience) and a measurable north star (conversion lift, cycle-time reduction, error rate, dollar savings). The conversation becomes concrete and comparable across teams.

Favor candidates with simple paths to production

When two ideas look similar on impact, prefer the one that can be deployed as an API against a well-defined interface, or the one that slots into an existing workflow with minimal change management.

Early delivery creates credibility, even if the model is modest. You can iterate to sophistication once the loop from “model” to “business metric” is functioning.

Example flow to assemble the list

In week one, a facilitator runs short interviews with department heads to capture pains and goals and checks data owners for availability signals.

In week two, the team distills everything into short, consistent cards: problem statement, business owner, affected KPIs, primary data, expected users, and how it would run in production (human-in-the-loop, batch report, real-time API).

This yields a cross-functional slate that’s ready for scoring rather than a brainstorm of slogans.
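To keep cards consistent across departments, the fields listed above can be captured in a small data structure. The sketch below is one possible shape, not a prescribed schema: the class name `CandidateCard`, the `RunMode` enum, the optional `data_action` field, and the `missing_fields` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class RunMode(Enum):
    """How the use case would run in production (values taken from the card description)."""
    HUMAN_IN_THE_LOOP = "human-in-the-loop"
    BATCH_REPORT = "batch report"
    REALTIME_API = "real-time API"


@dataclass
class CandidateCard:
    problem_statement: str
    business_owner: str
    affected_kpis: list[str]
    primary_data: list[str]
    expected_users: list[str]
    run_mode: RunMode
    data_action: str = ""  # optional: instrumentation, contracts, or labeling still needed

    def missing_fields(self) -> list[str]:
        """Return the names of required fields that are still empty."""
        required = {
            "problem_statement": self.problem_statement,
            "business_owner": self.business_owner,
            "affected_kpis": self.affected_kpis,
            "primary_data": self.primary_data,
            "expected_users": self.expected_users,
        }
        return [name for name, value in required.items() if not value]


# Illustrative card; every value here is made up.
card = CandidateCard(
    problem_statement="Support tickets wait too long before reaching the right team",
    business_owner="",  # not yet assigned
    affected_kpis=["first-response time"],
    primary_data=["support transcripts"],
    expected_users=["support agents"],
    run_mode=RunMode.HUMAN_IN_THE_LOOP,
)
print(card.missing_fields())  # ['business_owner']
```

A card that still reports missing fields is a signal to go back to the department head before the scoring session, rather than guessing on their behalf.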

A practical framework for prioritizing AI use cases

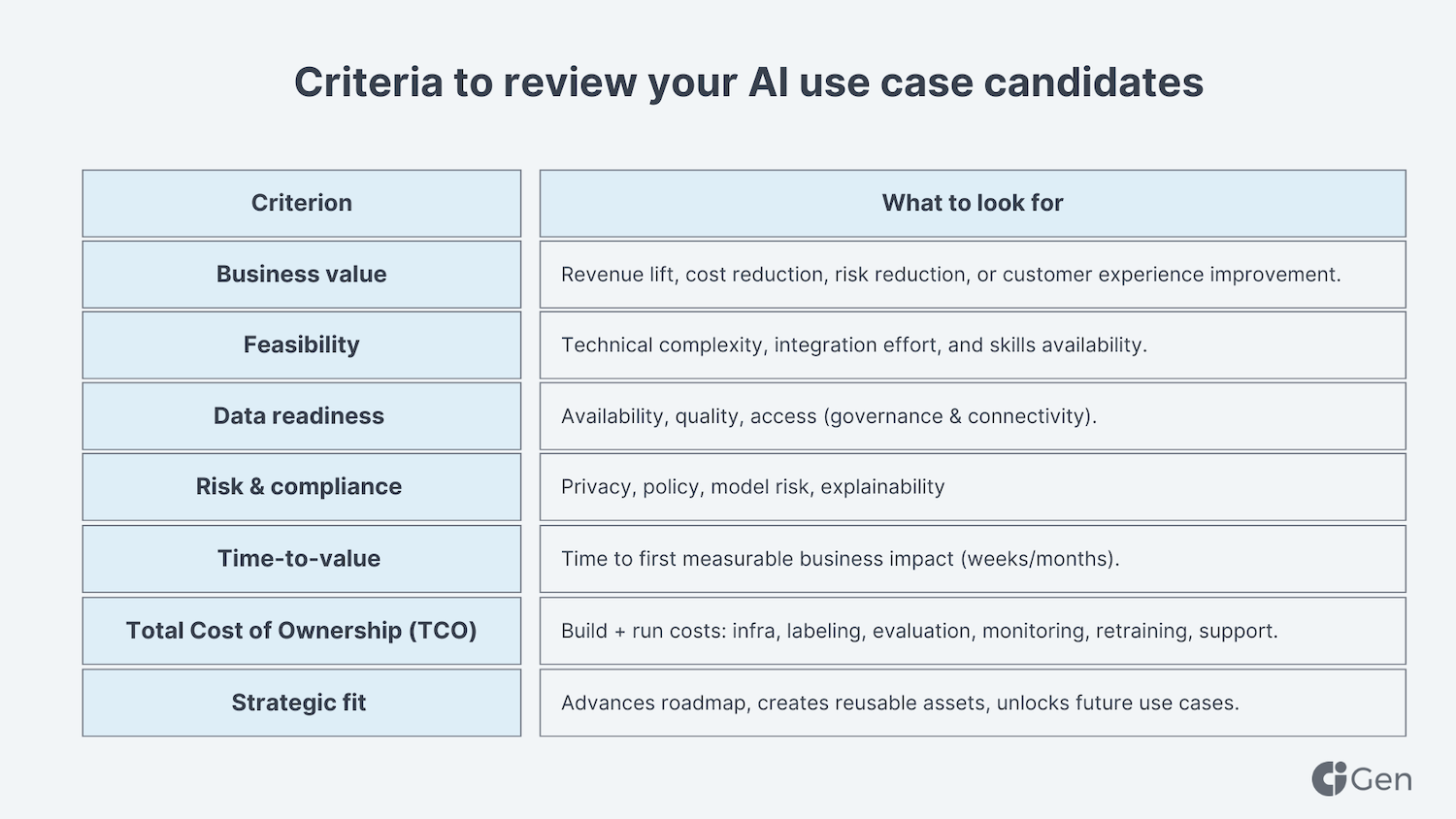

Once you have a candidate slate, you need a simple model that the organization can support. The core idea is to evaluate both value and feasibility, then adjust for risk, time, cost, and strategic considerations.

Begin with value: revenue lift, cost reduction, risk reduction, or a clear customer experience improvement. Value should be tied to the mechanics of your business - pricing, conversion, inventory turns, claim leakage - so the effect can be measured. Then consider feasibility as it will be experienced in delivery: model maturity for the task, integration surfaces, non-functional requirements such as latency or throughput, and the skills on hand to build and operate the solution.

Extend the view with the factors that often decide real-world success.

- Data readiness asks whether the required data exists, is lawful to use, and is accessible in practice.

- Risk and compliance reflect privacy, safety, fairness expectations, and the governance effort required.

- Time-to-value converts ambition into sequencing: faster items fund the portfolio and teach the organization how to land change.

- Cost should reflect build and run - labeling, evaluation, infrastructure, monitoring, retraining - so that a low development cost does not hide expensive operations.

Tie the framework together with weighting. Weights are where strategy lives: a quarter that prizes speed might elevate feasibility and time-to-value; a regulated environment might give compliance more influence; a margin-pressured year might penalize cost more heavily. Keep the model consistent in direction - if a column is a “goodness” score (higher means better), it should carry a positive weight; if it measures “badness” (higher means worse), either invert it or apply a negative weight. Document the rationale so future readers understand why this quarter emphasized one dimension over another.

Scoring dimensions and how to calibrate them

Calibration turns the framework into a working instrument. The goal is to make a 3 in Marketing comparable to a 3 in Operations so the portfolio is coherent.

Business value benefits from tangible baselines. For savings, anchor on current cycle time, error rates, or staffing hours and describe a plausible improvement range. For revenue, connect the dots from the model to a lever - more qualified traffic, higher attach rates, fewer cancellations - and describe how the effect reaches P&L. For risk reduction, quantify avoided incidents or expected loss.

Feasibility should blend algorithmic difficulty with systems reality. A straightforward classifier might be hard if it requires sub-second decisions in a chain of services without clear ownership. Map integration points, latency expectations, and where the decision will be consumed. Include skills and vendor posture - can your team operate this at 2 a.m., and will the vendor support the load you expect?

Data readiness is best decomposed into availability, quality, and access. Availability asks whether the data exists over a useful time horizon. Quality asks whether it is complete, consistent, labeled, and representative. Access covers both governance and plumbing: legal basis, consent posture, retention policy, and actual connectivity. If a use case scores low here but ranks high on value, that is a cue to pair it with a timed data initiative rather than to abandon it.
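One way to turn the three components into a single readiness score is a weakest-link rule, sketched below. Taking the minimum is an assumption made for this example (an average or a weighted blend would also be defensible), and the thresholds `high_value` and `low_readiness` are hypothetical defaults on a 1-5 scale.

```python
def data_readiness(availability: int, quality: int, access: int) -> int:
    """Weakest-link readiness: the lowest of the three 1-5 component scores (an assumption)."""
    return min(availability, quality, access)


def needs_data_initiative(value: int, readiness: int,
                          high_value: int = 4, low_readiness: int = 2) -> bool:
    """True when a case is valuable but its data is not ready yet.

    Such a case is paired with a timed data initiative instead of being dropped.
    """
    return value >= high_value and readiness <= low_readiness


# Illustrative scores: the data exists and is reachable, but quality is poor.
readiness = data_readiness(availability=4, quality=2, access=5)
print(readiness)                                   # 2
print(needs_data_initiative(value=5, readiness=readiness))  # True
```

The weakest-link rule is deliberately strict: excellent access does not compensate for data that is unlabeled or unrepresentative.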

Risk and compliance benefit from explicit criteria: privacy exposure, safety and abuse vectors, explainability needs, and model risk controls. Decide whether you prefer to see a “goodness” score (safer equals higher) or a “badness” measure (riskier equals higher). Match the weight sign accordingly so the math reinforces the policy.

Time-to-value and cost translate ambition into delivery habits. Time should represent the span to first measurable business impact, not only to a demo. Cost should consider the full lifecycle: building, deploying, evaluating, retraining, and supporting. If the portfolio is dominated by long timelines, inject a shorter item to maintain organizational energy; if the portfolio is all quick wins, add at least one capability builder that creates reusable infrastructure or governance.

Strategic fit often resolves ties. Ask whether the project creates assets others can reuse: cleaned data sets, shared features, reusable prompts, evaluation harnesses, or policy patterns. A short project that unlocks five more projects is worth more than its individual score suggests.

Finally, keep scoring human. A short note field beside each numeric score helps future readers understand the reason for a “2” or a “5” and reduces drift when new participants join the process. Revisit rubrics on a cadence - quarterly is common - so the instrument stays tuned to your operating context.

Running a prioritization sprint for candidate AI use cases

Treat prioritization as a short, focused sprint rather than a rolling conversation. Two to four hours is enough if the prep is tight. Before the session, collect a small packet for each candidate: a one-line problem statement, the business owner, the data sources involved, the people or systems that will consume the output, and any constraints you already know about - regulatory, brand, capacity, vendor lock-ins. If you have baseline metrics or rough cost assumptions, include them; even imperfect numbers sharpen the discussion.

Open the session by agreeing on the outcome: a ranked list for Now / Next / Later and a record of why. Confirm the weights and the scoring rubric so you don’t end up disputing definitions later. Then move through the candidates in a steady cadence. A quick evidence check comes first - do we have the data, can we integrate it, are there policy flags? - followed by silent scoring. The silence matters; it reduces anchoring and gives quieter voices space. When scores are in, discuss outliers rather than re-litigating every number. Where people disagree, ask for the one piece of evidence that would change the score and note it as a follow-up.
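Finding the outliers worth discussing can be done mechanically once the silent scores are collected. The sketch below flags criteria where the gap between the highest and lowest score reaches a threshold; the function name `disputed_criteria` and the default `min_spread` of 2 points are assumptions for illustration.

```python
def disputed_criteria(scores: dict[str, dict[str, int]],
                      min_spread: int = 2) -> dict[str, int]:
    """Return {criterion: spread} for criteria where scorers disagree.

    `scores` maps each criterion to the scores given by each participant.
    Spread is the highest score minus the lowest score.
    """
    disputed = {}
    for criterion, by_scorer in scores.items():
        spread = max(by_scorer.values()) - min(by_scorer.values())
        if spread >= min_spread:
            disputed[criterion] = spread
    return disputed


# Illustrative silent scores for one candidate (names and numbers are made up).
silent_scores = {
    "value": {"ana": 4, "ben": 4, "chi": 5},
    "data_readiness": {"ana": 2, "ben": 5, "chi": 3},
}
print(disputed_criteria(silent_scores))  # {'data_readiness': 3}
```

Only the flagged criteria go to discussion; for everything else the group can accept the median or mean and move on.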

By the last half hour, shift from numbers to decisions. Sort the table, pressure-test the top few against real constraints, and confirm owners and dates. Leave with three tangible artifacts: the ranked list (including any tie-break notes), the risks and dependencies attached to each top case, and a short decision log that explains what moved forward, what moved to later, and why. Those notes save weeks of rework when leadership or compliance revisits the topic.

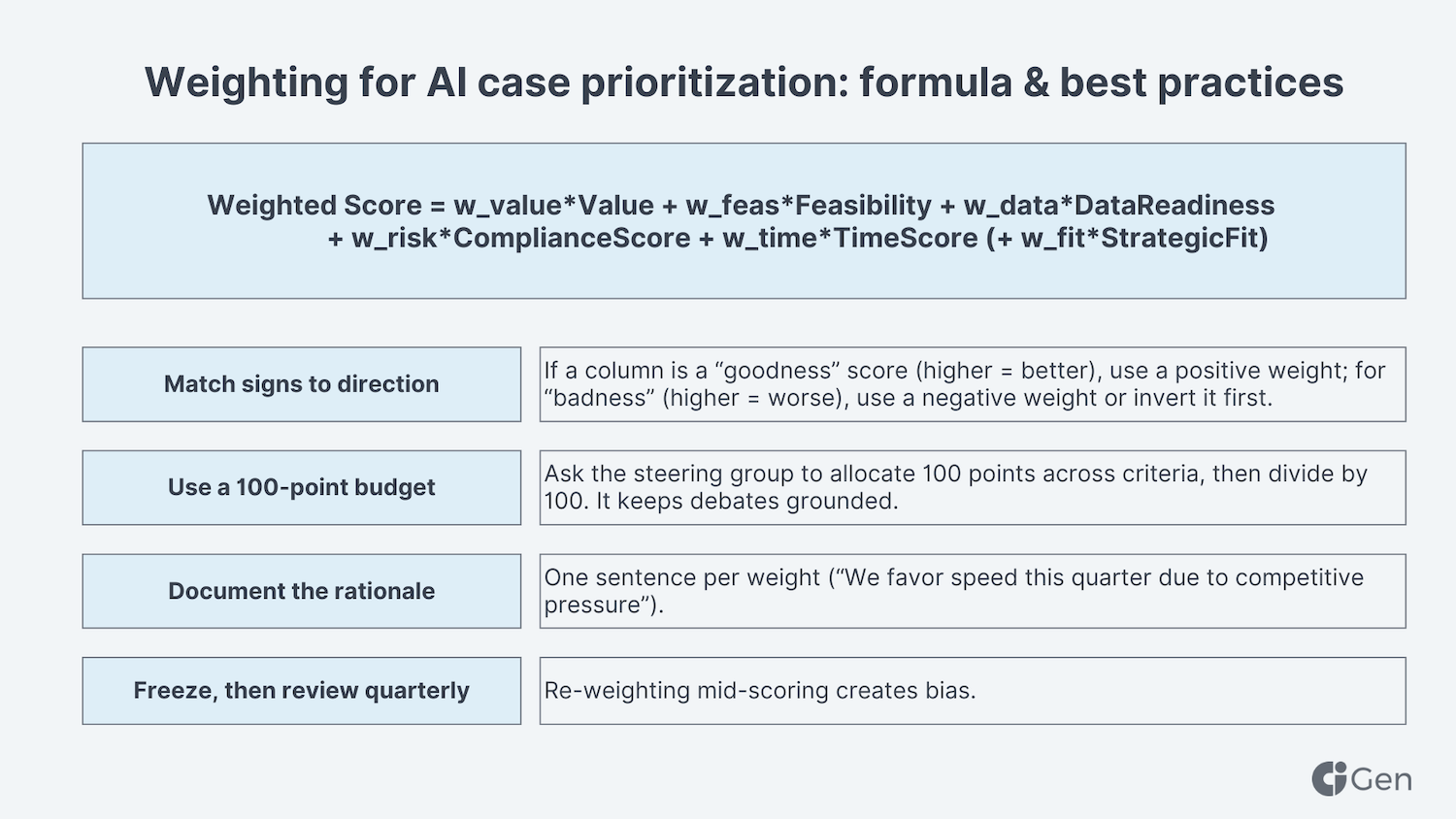

Weighting: aligning the model to strategy

A weighted score is a way to define your high-level priorities in numbers. The formula looks technical:

Weighted = w_value·Value + w_feas·Feasibility + w_data·Data + w_risk·Compliance + w_time·Time (+ w_fit·StrategicFit)

yet the purpose is simple: give more influence to what matters this quarter and less to what can wait.

The most important rule is consistency. If a column represents “goodness” (higher means better), its weight should be positive. If a column represents “badness” (higher means worse), either flip the column into a goodness score or use a negative weight. That one detail prevents the head-scratching moment where a safer, faster option mysteriously drops in the ranking.
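The consistency rule can be made concrete with a short sketch. It assumes every criterion is scored on a 1-5 scale and flips any "badness" column into a goodness score before weighting; the `Criterion` class, the criterion names, and the example weights are hypothetical.

```python
from dataclasses import dataclass

SCALE_MIN, SCALE_MAX = 1, 5  # assumed scoring scale


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float
    higher_is_better: bool = True


def to_goodness(score: float, higher_is_better: bool) -> float:
    """Flip a 'badness' score so that higher always means better (1 <-> 5, 2 <-> 4)."""
    return score if higher_is_better else SCALE_MIN + SCALE_MAX - score


def weighted_score(scores: dict[str, float], criteria: list[Criterion]) -> float:
    """Sum of weight * goodness score over all criteria."""
    return sum(c.weight * to_goodness(scores[c.name], c.higher_is_better)
               for c in criteria)


criteria = [
    Criterion("value", 0.30),
    Criterion("feasibility", 0.20),
    Criterion("data", 0.20),
    Criterion("compliance_risk", 0.15, higher_is_better=False),  # badness column
    Criterion("time", 0.15),  # scored so that faster means higher
]

case_a = {"value": 4, "feasibility": 3, "data": 4, "compliance_risk": 2, "time": 5}
case_b = dict(case_a, compliance_risk=1)  # identical, but lower compliance risk

print(round(weighted_score(case_a, criteria), 2))  # 3.95
print(round(weighted_score(case_b, criteria), 2))  # 4.1
```

Because the risk column is inverted before weighting, the lower-risk variant scores higher, which is the behavior the rule is meant to guarantee.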

Set weights with a lightweight exercise rather than a debate. Give the steering group a 100-point budget and ask them to allocate those points across the criteria. Dividing by 100 turns those points into weights. You’ll learn a lot from the conversation: a sales-driven organization will favor value and speed, a heavily regulated one will emphasize compliance and feasibility, and a bootstrapped team will penalize cost more aggressively.
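The 100-point exercise translates directly into arithmetic. The sketch below assumes each steering group member submits their own allocation and the group weight is the average; that averaging step is an assumption for this example, since a group can equally agree on a single shared allocation.

```python
def weights_from_points(allocations: list[dict[str, int]]) -> dict[str, float]:
    """Average per-member 100-point allocations and convert them to weights that sum to 1."""
    for allocation in allocations:
        if sum(allocation.values()) != 100:
            raise ValueError(f"allocation must total 100 points: {allocation}")
    criteria = sorted({name for allocation in allocations for name in allocation})
    members = len(allocations)
    return {
        name: sum(allocation.get(name, 0) for allocation in allocations) / (100 * members)
        for name in criteria
    }


# Two illustrative allocations (made-up numbers).
allocations = [
    {"value": 40, "feasibility": 20, "data": 20, "compliance": 10, "time": 10},
    {"value": 30, "feasibility": 20, "data": 10, "compliance": 20, "time": 20},
]
weights = weights_from_points(allocations)
print({name: round(w, 2) for name, w in weights.items()})
# {'compliance': 0.15, 'data': 0.15, 'feasibility': 0.2, 'time': 0.15, 'value': 0.35}
```

Rejecting allocations that do not total 100 keeps the exercise honest: nobody can raise one criterion without lowering another.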

Capture the rationale in a sentence beside the weights - “We’re emphasizing time-to-value due to a seasonal window” or “Compliance has elevated importance during our audit.” That note becomes the anchor when new ideas arrive next week.

Once set, freeze the weights for the scoring round. Re-tuning midway introduces bias because the weights begin to chase the ideas. A quarterly review is usually enough to keep them aligned with market realities and internal capacity.

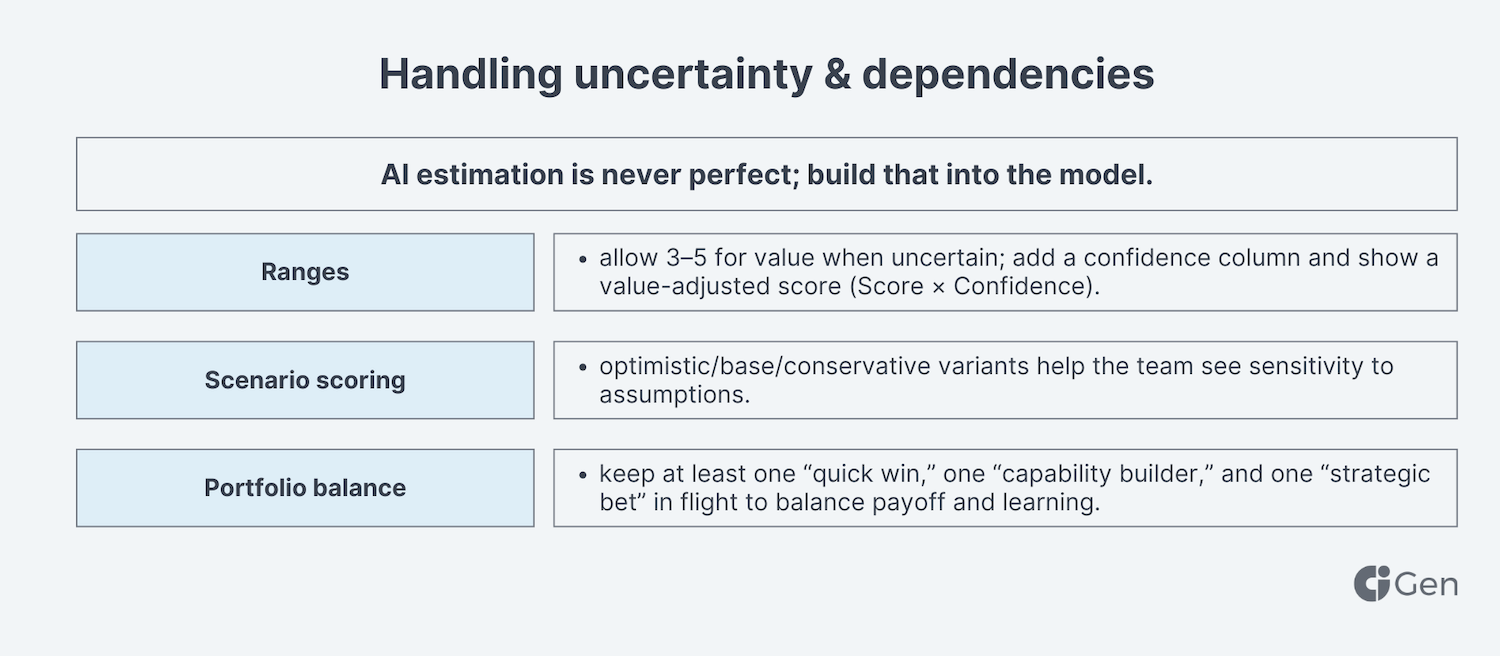

Handling uncertainty and dependencies while prioritizing AI use cases

Every score carries some uncertainty. Make space for that rather than pretending it’s precise. A simple way is to allow ranges during scoring - “value is 3–5” - and to record a confidence level. If two cases tie, the one with higher confidence usually deserves the earlier slot, because it’s more likely to land.
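A minimal sketch of range scoring with a confidence tie-break follows. Using the midpoint of the range as the point estimate and expressing confidence as a number between 0 and 1 are both assumptions made for illustration; the case names and values are made up.

```python
from dataclasses import dataclass


@dataclass
class RangedScore:
    name: str
    low: float
    high: float
    confidence: float  # 0.0 (a guess) to 1.0 (well evidenced); an assumed convention

    @property
    def midpoint(self) -> float:
        return (self.low + self.high) / 2


def rank(cases: list[RangedScore]) -> list[RangedScore]:
    """Sort by midpoint (highest first); on a tie, higher confidence goes first."""
    return sorted(cases, key=lambda c: (-c.midpoint, -c.confidence))


cases = [
    RangedScore("churn prevention", low=3, high=5, confidence=0.6),
    RangedScore("ticket triage", low=4, high=4, confidence=0.9),
    RangedScore("route optimization", low=2, high=3, confidence=0.8),
]
print([c.name for c in rank(cases)])
# ['ticket triage', 'churn prevention', 'route optimization']
```

The first two cases share a midpoint of 4, so the narrower, better-evidenced estimate takes the earlier slot.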

Scenario scoring is another useful lens. Create optimistic, base, and conservative views for a few pivotal cases and see how sensitive the ranking is. If a candidate swings wildly with small assumption changes, treat it as a later-wave option or pair it with a short discovery task to buy down the risk.
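Sensitivity can be checked by ranking the same cases under each scenario and measuring how far each one moves. The sketch below is one simple way to do that; the function names and the scenario scores are hypothetical.

```python
def ranks(scores: dict[str, float]) -> dict[str, int]:
    """Map each case to its rank (1 = best) for one scenario."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


def rank_swings(scenarios: dict[str, dict[str, float]]) -> dict[str, int]:
    """For each case, the gap between its worst and best rank across scenarios."""
    per_scenario = [ranks(scores) for scores in scenarios.values()]
    names = per_scenario[0].keys()
    return {
        name: max(r[name] for r in per_scenario) - min(r[name] for r in per_scenario)
        for name in names
    }


# Illustrative weighted scores under three views (made-up numbers).
scenarios = {
    "optimistic":   {"churn": 4.6, "triage": 3.9, "forecast": 3.7},
    "base":         {"churn": 3.6, "triage": 3.8, "forecast": 3.5},
    "conservative": {"churn": 2.9, "triage": 3.6, "forecast": 3.3},
}
print(rank_swings(scenarios))  # {'churn': 2, 'triage': 1, 'forecast': 1}
```

Here the churn case moves from first to last as assumptions tighten, which marks it as the candidate for a short discovery task before it takes an early slot.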

Finally, balance the portfolio deliberately. Aim to have one quick win that returns value within weeks, one capability builder that upgrades your data or evaluation muscles, and one strategic bet that aligns to longer-term differentiation. That mix keeps momentum high while building foundations you’ll use again.

From scores to a thought-through AI roadmap

Scores are decision support. Turning them into a plan is where adoption happens. Start with gates: define minimum thresholds for data readiness and compliance before a build begins. This avoids stalled projects that looked exciting on paper but can’t get over the basics.

For items that do pass the gates, write down the conditions under which they continue. Clear exit criteria for a PoC - target lift, error reduction, cycle-time improvement, or a ceiling on operational cost - make the next decision mechanical rather than political. If the pilot clears the bar, it earns investment; if not, it yields learning and you move on.
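Gates and exit criteria are easiest to keep mechanical when they are written as explicit checks. The sketch below assumes 1-5 goodness scores for data readiness and compliance, hypothetical minimum thresholds of 3, and an interpretation in which every listed exit criterion must be met; the metric names and numbers are illustrative only.

```python
import operator

MIN_DATA_READINESS = 3  # hypothetical gate thresholds on a 1-5 scale
MIN_COMPLIANCE = 3      # compliance scored as goodness: higher means safer

COMPARATORS = {">=": operator.ge, "<=": operator.le}


def passes_gates(data_readiness: int, compliance: int) -> bool:
    """A build may start only when both minimum thresholds are met."""
    return data_readiness >= MIN_DATA_READINESS and compliance >= MIN_COMPLIANCE


def poc_decision(results: dict[str, float],
                 exit_criteria: dict[str, tuple[str, float]]) -> str:
    """Return 'go' when every exit criterion is satisfied, otherwise 'stop'."""
    met = all(
        COMPARATORS[op](results[metric], threshold)
        for metric, (op, threshold) in exit_criteria.items()
    )
    return "go" if met else "stop"


# Exit criteria written down before the PoC starts (made-up targets).
exit_criteria = {
    "conversion_lift_pct": (">=", 2.0),     # target lift
    "monthly_run_cost_usd": ("<=", 5000),   # ceiling on operational cost
}

print(passes_gates(data_readiness=4, compliance=3))  # True
print(poc_decision({"conversion_lift_pct": 2.4, "monthly_run_cost_usd": 4200},
                   exit_criteria))  # go
print(poc_decision({"conversion_lift_pct": 2.4, "monthly_run_cost_usd": 6100},
                   exit_criteria))  # stop
```

Writing the comparison direction into each criterion avoids a later argument about whether a number was meant as a floor or a ceiling.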

A smooth handoff into delivery matters as much as model quality. Attach a short checklist to each green-lit item: how it will be monitored, how often it will be retrained, how incidents will be handled, and who owns the change management on the business side. Close by naming the business KPIs that will move in production and the person who reports them. When ownership is explicit, the project survives the calendar.

If you keep this rhythm - clarify what you value through weights, score with evidence, acknowledge uncertainty, and commit to gates and owners - the portfolio becomes easier to steer. You get fewer heroic rescues and more steady deployment, and each project makes the next one faster.

Anti-patterns to avoid (and how to overcome them)

Novelty bias

Shiny demos are persuasive. A proof-of-concept that writes product copy or chats with policies can easily crowd out a quieter use case that would lift conversion or shorten cycle time. A quick test helps: if the business owner can’t name the KPI that will move, you’re evaluating a demo, not a use case. Shift the conversation to outcomes - “What number changes?” - and compare candidates on that yardstick. Keep a small “exploration” budget for cutting-edge ideas, but protect the delivery track for work tied to revenue, cost, or risk.

Data wish-casting

Teams often assume “we’ll get the data once we start,” only to discover missing history, spotty labels, or legal barriers. Treat data as a requirement, not a hope. During intake, write a mini data plan on the candidate card: sources, time span, access owner, governance notes, and what must be instrumented or labeled. If the plan is non-trivial, pair the use case with a time-boxed data task and rescore once it lands. This keeps momentum without pretending the gap isn’t there.

Static weights

Strategy shifts with markets, seasons, and audits. If weights never change, your scoring model slowly stops reflecting what leadership actually values. Revisit weights on a steady cadence (quarterly works for most). Capture the rationale in one line beside each weight - “Speed emphasized ahead of holiday peak,” “Compliance raised during model risk review.” When a new idea appears mid-quarter, use the frozen weights; when the quarter turns, adjust and communicate.

One-and-done PoCs

Pilots that have no clear path to production chew up talent and trust. Before you green-light a PoC, write down what happens if it works: which system it feeds, who owns it, how it will be monitored, and which KPI it must move. Add a simple go/stop rule - target lift, error reduction, cycle-time improvement, or run-cost ceiling. When the pilot clears that bar, it earns investment automatically; when it misses, it yields learning and you move on without post-hoc debates.

Hidden operations

Models come with ongoing work: labeling, evaluation, monitoring, retraining, incident handling, and user support. If you treat these as afterthoughts, total cost and risk creep up. Make operations visible from day one. In scoping, include an estimate for labeling effort, an evaluation plan (with real-world slices, not just overall metrics), thresholds for drift, a retraining cadence, and an incident playbook. Name the owner for each. When those pieces are present on the spec, surprises are rare and handoffs are cleaner.

A quick filter that helps mitigate most of the above

At the end of each prioritization sprint, you can also add three lines to the decision log for every top-ranked case:

- the KPI that will move and how you’ll measure it;

- the data plan (what exists, what’s missing, who owns access);

- the ops plan (monitoring, retraining, support, and ownership).

If any of the three lines is vague, the item isn’t ready to move forward yet. Park it under Later with the missing action spelled out and a date. This simple discipline protects your roadmap from hype, keeps the team honest about data and effort, and ensures that good prototypes become running services that deliver value.

AI case prioritization template - instant download

This workbook guides you from quick triage to deeper planning across three tabs: Starter, Growth, and Enterprise, so you can match the scoring effort to the complexity of the project.

Each tab is prefilled with dummy data to show how the scoring works. In every tab, grey-shaded cells are formula-driven and should be left as they are; they calculate scores, ranks, and charts automatically. White cells are for you to edit: replace the placeholder titles, problems, owners, categories, data sources, and numeric inputs with your own.

On Growth, adjust the weights in cells F2:I2 (ROI, Strategic fit, Data readiness, Cost) to reflect your priorities; the Weighted score and Rank (columns J–K) update instantly. Note that “Implementation cost” here is scored 1–5 where 5 = cheapest.

On Enterprise, enter Annual benefit, 3-year TCO, Feasibility (1–5), Compliance risk (1–5), and Time-to-value (months); the sheet computes ROI%, converts feasibility/compliance/time into 0–1 scores, and applies the weights in H2:K2 to produce a single Weighted score and a Rank.
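For readers who want to reproduce this kind of conversion outside a spreadsheet, the sketch below shows one plausible set of formulas. These are assumptions for illustration and are not taken from the workbook: ROI is computed as three-year benefit net of TCO divided by TCO, 1-5 scores are mapped linearly onto 0-1 (with compliance risk inverted), and time-to-value is scaled against a hypothetical 24-month horizon.

```python
def roi_pct(annual_benefit: float, tco_3yr: float, years: int = 3) -> float:
    """ROI as a percentage: (total benefit - TCO) / TCO * 100 (assumed definition)."""
    return (annual_benefit * years - tco_3yr) / tco_3yr * 100


def scale_1_to_5(score: float, higher_is_better: bool = True) -> float:
    """Map a 1-5 score linearly onto 0-1; invert it when higher means worse."""
    unit = (score - 1) / 4
    return unit if higher_is_better else 1 - unit


def time_score(months: float, horizon_months: float = 24) -> float:
    """Shorter time-to-value scores closer to 1; anything past the horizon scores 0."""
    return max(0.0, 1 - months / horizon_months)


# Illustrative inputs (made-up numbers).
print(roi_pct(annual_benefit=400_000, tco_3yr=600_000))  # 100.0
print(scale_1_to_5(4))                                    # feasibility 4 -> 0.75
print(scale_1_to_5(2, higher_is_better=False))            # compliance risk 2 -> 0.75
print(time_score(6))                                      # 6 months -> 0.75
```

Whatever formulas your copy of the sheet uses, the point is the same: every input lands on a common 0-1 goodness scale before the weights are applied.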

The Dashboard reads directly from the Growth and Enterprise tabs and will update as you change inputs and weights.

Keep the sheet as a living decision log, not only a calculator. When you edit scores or weights, jot a one-line reason beside the change and review the settings on a regular cadence. That small habit makes your selections easier to explain and your roadmap easier to maintain.

More AI adoption strategy templates? We’ve got you

A clear use case prioritization process turns AI adoption from a list of ideas into a working plan with clear outcomes, owners, and guardrails. When you calibrate the criteria, set sensible weights, and run short, evidence-based sprints, the portfolio becomes easier to steer and each delivery lays groundwork for the next. Treat this template as a living record of decisions: update scores when facts change, review the weights on a cadence, and keep the notes beside each choice so stakeholders can follow the reasoning.

We’ll continue to release practical tools like checklists, scoring presets, workshop agendas, and roadmap templates to help future-forward businesses make the AI-strategy-to-execution path faster and more transparent.