Enterprises today generate and manage data across numerous systems: on-premises databases, SaaS platforms, IoT devices, and multi-cloud applications. Integrating this information into a unified view is essential for decision-making, analytics, and automation. However, traditional data integration methods often struggle with scalability, governance, and real-time performance.

Azure data integration services provide a cloud-native way to connect, orchestrate, and transform data across diverse sources. Using tools like Azure Data Factory, Azure Synapse Pipelines, and Logic Apps, organizations can automate data flows, ensure consistency, and enable faster access to insights across their digital ecosystem.

What are Azure data integration services?

Azure data integration services represent a set of managed tools and frameworks in Microsoft Azure designed to unify data from multiple environments. They simplify ETL (extract, transform, load) and ELT processes, automate workflows, and support both batch and real-time scenarios.

Core Azure data integration components include:

- Azure Data Factory (ADF): The primary ETL/ELT service for building and orchestrating data pipelines.

- Azure Synapse Pipelines: Integration capabilities built into Synapse Analytics for data warehousing and analytics workloads.

- Azure Logic Apps: Workflow automation connecting data and applications through pre-built connectors.

- Azure Event Grid and Service Bus: Tools for event-driven and message-based integrations.

- Azure API Management: A secure way to expose and manage APIs for data exchange between systems.

Together, these services enable seamless movement of data between on-premises and cloud environments, supporting hybrid, multi-cloud, and SaaS integrations at scale.

Key capabilities and benefits

Azure data integration services deliver a comprehensive environment for managing data movement and transformation.

Key advantages include:

- Unified hybrid connectivity: Connect over 90 on-premises and cloud data sources, SQL Server, SAP, Salesforce, Snowflake, and others, using pre-built connectors.

- Scalable pipelines: Scale on demand with serverless compute that handles both scheduled and event-driven workloads.

- Streamlined ETL/ELT operations: Use drag-and-drop data flow interfaces or Spark-based transformations to design complex workflows without custom code.

- Centralized governance: Integrate with Microsoft Purview to ensure consistent data cataloging, lineage tracking, and compliance.

- Performance and cost efficiency: Pay only for the compute you use while optimizing runtime through pipeline monitoring and cost analysis tools in Azure Monitor.

Core components of Azure data integration

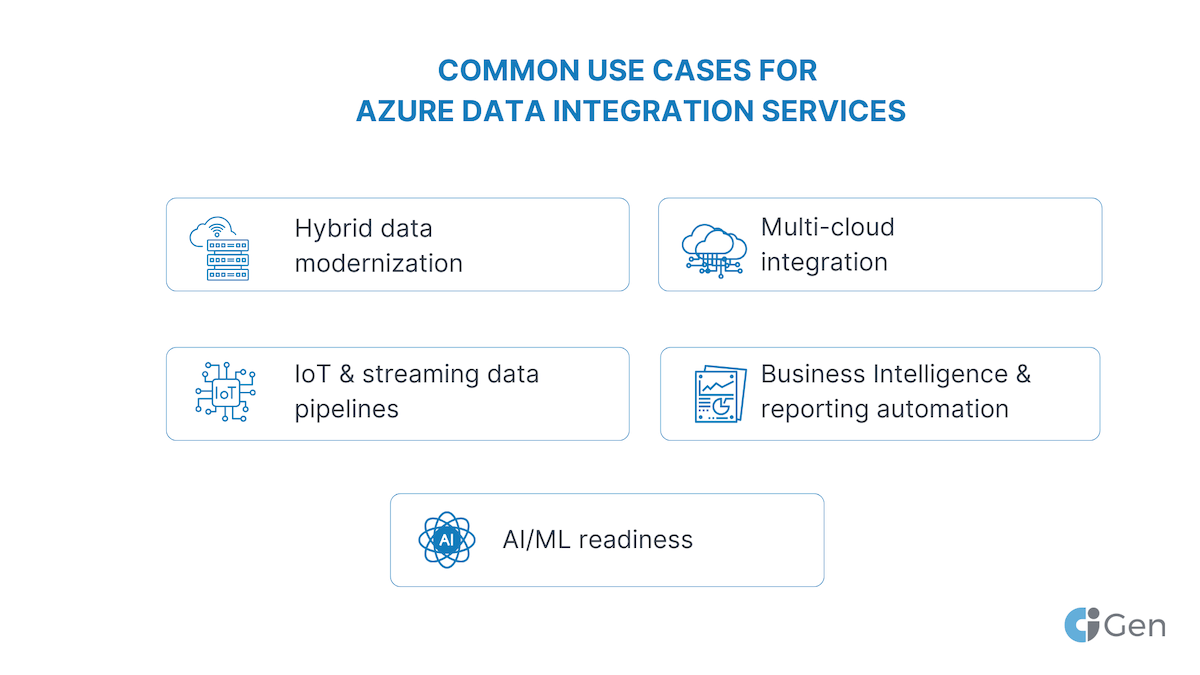

Common use cases for Azure data integration services

Integrating data effectively is about creating operational and analytical consistency across business systems. As enterprises move toward hybrid and multi-cloud architectures, the demand for unified data pipelines that can ingest, cleanse, and transform information in near real time becomes critical. Azure data integration services address this by enabling secure, governed, and scalable data flows across structured and unstructured sources.

Hybrid data modernization on Azure

Many organizations begin by modernizing legacy ETL processes. Azure Data Factory (ADF) can connect on-premises SQL Server or Oracle databases through self-hosted integration runtimes and replicate them to Azure Data Lake Storage Gen2 or Azure Synapse Analytics. This forms the foundation for cloud-based reporting, predictive analytics, and machine learning.

Example: Migrating overnight ETL jobs from SSIS to Azure Data Factory pipelines with parameterized triggers and blob storage staging, resulting in faster load times and reduced maintenance overhead.

Multi-cloud integration

In distributed enterprises, data often resides across multiple cloud providers. Azure Data Factory supports ingestion from AWS S3, Google Cloud Storage, and third-party SaaS applications through over 90+ native connectors and REST APIs. Combined with Azure Data Share or Dataflows Gen2, this allows data engineers to unify siloed datasets into a central analytical layer, ensuring consistency across the enterprise.

IoT and streaming data pipelines

For real-time analytics, ADF can be combined with Azure Stream Analytics, Event Hubs, and Event Grid to process sensor or telemetry data from connected devices. This data can then be enriched, transformed, and stored in Synapse or Cosmos DB for downstream consumption.

A common architecture involves:

Event Hubs → Stream Analytics → Data Factory → Synapse Analytics → Power BI.

BI and reporting automation

Organizations using Power BI can automate dataset refreshes through ADF pipelines or Synapse integration. Using metadata-driven control tables, teams can orchestrate scheduled refreshes, data quality checks, and incremental updates, ensuring reports reflect the latest data without manual intervention.

AI/ML readiness

Preparing clean, labeled data is one of the biggest challenges in AI model development. Azure Data Factory and Synapse simplify this through mapping data flows, allowing transformation, deduplication, and schema alignment before loading data into Azure Machine Learning (AML) or Databricks environments.

The output: reproducible, traceable datasets ready for model training, evaluation, and deployment.

In summary, Azure’s data integration capabilities span the full spectrum from legacy modernization to AI enablement. Whether handling periodic ETL or continuous event streams, Azure provides both the performance and governance required for enterprise-grade integration.

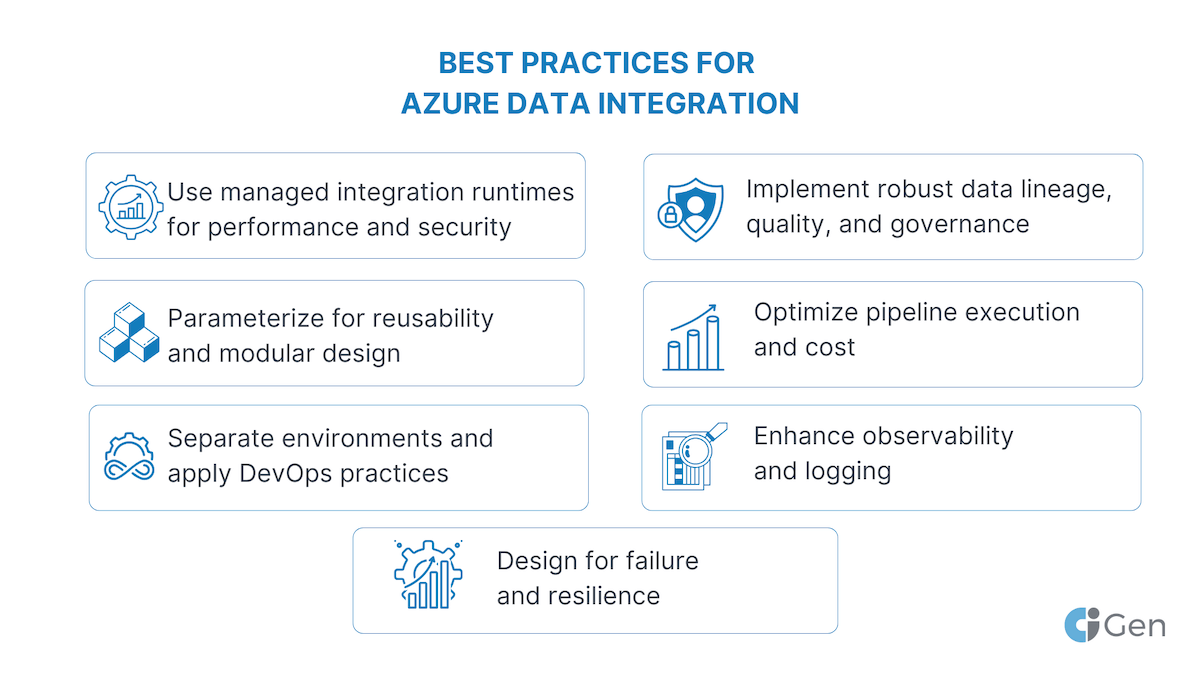

Best practices for Azure data integration

Building scalable and maintainable pipelines on Azure requires an understanding of both cloud architecture principlesand data engineering fundamentals. The following practices are drawn from field-tested implementations and align with Microsoft’s Well-Architected Framework for data workloads.

1. Use managed integration runtimes for performance and security

Azure Data Factory offers three integration runtime types: Azure, Self-Hosted, and SSIS.

- Use Azure IR for fully cloud-native data movement and transformation across Azure regions.

- Use Self-Hosted IR when accessing on-premises systems or data behind firewalls.

- For existing SSIS packages, lift-and-shift to ADF-managed SSIS IRs to preserve logic while gaining cloud scalability.

For hybrid setups, configure auto-resolve integration runtimes to minimize latency between source and target.

2. Parameterize for reusability and modular design

Avoid building static pipelines. Instead, design parameterized templates for datasets, linked services, and activities. This enables configuration-based deployment and easier maintenance.

You can use JSON templates or ADF template repository integration to standardize pipeline patterns across teams.

3. Separate environments and apply DevOps practices

Maintain distinct dev/test/prod environments, each backed by a separate Data Factory instance or Git branch.

- Integrate ADF with Azure Repos or GitHub for version control.

- Use Azure DevOps release pipelines to automate deployments via ARM or Bicep templates.

- Implement continuous integration (CI) for validation and continuous delivery (CD) for release management.

This ensures that schema changes, connection strings, and access credentials are promoted systematically with rollback options.

4. Implement robust data lineage, quality, and governance

Integrate Azure Data Factory and Synapse with Microsoft Purview for metadata scanning, lineage tracking, and policy enforcement.

Set up data validation steps within pipelines using custom scripts or Databricks notebooks to check for nulls, duplicates, or schema drift before committing transformations.

For compliance-heavy sectors (finance, healthcare), leverage Azure Key Vault to securely store credentials and connection secrets.

5. Optimize pipeline execution and cost

ADF’s pricing is based on pipeline activity and data movement.

- Group small transformations to reduce pipeline triggers.

- Use polybase loading for bulk copy operations into Synapse or SQL Database.

- Enable debug runs before production deployment to validate pipeline performance.

- Track run history in Azure Monitor and configure alerts for performance anomalies or cost spikes.

6. Enhance observability and logging

Integrate pipelines with Application Insights for structured logging, latency measurement, and root-cause analysis.

You can push activity metadata (pipeline name, execution ID, duration, data volume) into Log Analytics, then visualize it in Power BI or Grafana dashboards for proactive performance management.

7. Design for failure and resilience

Azure recommends building idempotent pipelines, workflows that can safely rerun after failure without data duplication.

Implement retry policies and checkpointing mechanisms, and use “Until” or “If Condition” activities for error branching.

For mission-critical processes, use global parameter control tables that store last-successful-run timestamps, ensuring automatic recovery after transient network or service disruptions.

To conclude, well-architected Azure data integration pipelines go beyond connecting data sources, they embody security, automation, resilience, and governance. Teams that embed these practices early in development can scale confidently without facing technical debt later.

Getting started with Azure data integration

Starting an Azure data integration journey involves balancing immediate needs with long-term scalability. While Azure offers a rich ecosystem of tools, success depends on aligning technical capabilities with business goals, ensuring that integrations deliver measurable outcomes like faster reporting, better decision-making, or cost reduction.

Step 1. Assess your current environment

Conduct a comprehensive inventory of existing data systems: databases, APIs, data warehouses, and file-based repositories.

Identify integration challenges such as schema inconsistency, latency, and access controls. Azure’s Data Migration Assistant and Purview Data Map can accelerate this assessment.

Step 2. Choose the right Azure components

Not all data workloads are equal:

- For ETL/ELT: Azure Data Factory and Synapse Pipelines

- For real-time processing: Stream Analytics, Event Hubs, and Event Grid

- For orchestration and automation: Logic Apps and Power Automate

Selecting the correct combination avoids architectural sprawl and simplifies management.

Step 3. Design a minimal viable pipeline

Start small with a proof-of-concept pipeline integrating one on-prem and one cloud data source. Define data movement frequency, mapping rules, and monitoring metrics.

Use mapping data flows for visual transformations and Data Flow Debug mode to validate logic before production.

Document dependencies between datasets and triggers, this becomes crucial as your pipelines scale.

Step 4. Automate using DevOps

Integrate ADF or Synapse with Azure DevOps CI/CD pipelines for code versioning, deployment, and testing. Store all pipeline definitions as Infrastructure-as-Code (IaC) using ARM/Bicep templates.

Automate data quality checks with pytest or Great Expectations frameworks embedded in the pipeline logic.

Step 5. Scale securely and monitor continuously

As workloads grow, configure role-based access control (RBAC) via Azure AD and isolate sensitive operations in private endpoints or VNET-integrated runtimes.

Use Application Insights dashboards to monitor latency, throughput, and error rates.

Apply tags and budgets in Azure Cost Management to track cost centers per data domain.

Getting started with Azure data integration is less about individual tools and more about building a repeatable, governed framework that connects systems securely and efficiently. By combining technical readiness (DevOps, governance, scalability) with strategic intent (business alignment, ROI tracking), organizations can turn Azure into a backbone for intelligent, data-driven operations.

Conclusion

Modern organizations depend on fast, reliable access to unified data. Azure data integration services provide the infrastructure and flexibility to achieve this, whether the goal is real-time analytics, hybrid cloud modernization, or AI-driven insights.

With tools like Azure Data Factory, Synapse, and Logic Apps, teams can reduce manual effort, improve data quality, and accelerate their time-to-insight. Supported by Azure’s built-in governance, security, and scalability, these services form the backbone of a future-ready data ecosystem.