Introduction: The convergence of AI and APIs

In 2025, artificial intelligence is a competitive advantage for industry leaders across sectors. From customer service automation to predictive analytics and intelligent workflows, AI is becoming embedded in the core of modern digital products. But turning AI from an experiment into a business driver requires more than building models: it requires a robust software infrastructure to operationalize those models at scale.

At the heart of that infrastructure lie application programming interfaces (APIs). APIs serve as the interface between your models and the systems, applications, and users that consume them. Whether it’s exposing a fraud detection model to your finance platform or integrating a natural language processing (NLP) model into a support chatbot, APIs are the operational backbone that enables AI to deliver real-world value.

For enterprises already using the Microsoft ecosystem, .NET is a powerful choice for building these AI-ready APIs. With its performance-focused runtime, cross-platform capabilities, and deep integration with Azure services, .NET provides a production-grade environment for designing scalable, secure APIs that expose AI capabilities reliably and efficiently.

This article outlines best practices, architecture tips, and deployment strategies for building AI-ready APIs in .NET, covering everything from choosing between REST and gRPC to integrating Azure-hosted models, optimizing performance, and managing A/B testing across model versions. Whether you're just beginning to operationalize your AI strategy or scaling up a mature ML pipeline, the goal is the same: to design APIs that are flexible, observable, and future-proof.

Designing scalable AI-ready APIs in .NET

Choose the right communication protocol: REST vs. gRPC

The first architectural decision when exposing AI capabilities via .NET APIs is selecting the right protocol. Both REST and gRPC are valid options, but they serve different use cases.

REST (Representational State Transfer)

- Typically served over HTTP/1.1 (though not tied to it) and widely supported across web, mobile, and third-party systems.

- Human-readable JSON payloads make debugging easier.

- Best suited for external or client-facing APIs, such as mobile apps, web frontends, or partner integrations.

- Easy to expose via ASP.NET Core Web API with built-in support for routing, Swagger/OpenAPI, and CORS.

gRPC (a high-performance RPC framework originally developed at Google)

- Uses HTTP/2 and Protocol Buffers for binary serialization, enabling faster communication and smaller payloads.

- Strongly typed contracts and bi-directional streaming support.

- Ideal for internal service-to-service communication, such as when chaining microservices for data pre-processing, model inference, and post-processing.

- Supported in .NET via Grpc.AspNetCore.

Best practice:

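Use REST for public-facing AI endpoints (e.g., /api/predict/sentiment) and gRPC for backend pipelines or internal APIs connecting multiple services in an AI pipeline. Browsers cannot call gRPC services directly without gRPC-Web, which is another reason to keep gRPC behind the public REST layer.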

Structuring your API project

An AI-ready API must be cleanly structured to separate concerns and allow easy extension. For production-grade software, consider following the Clean Architecture pattern:

- Domain layer: Defines core entities and business logic (e.g., prediction request and response contracts).

- Application layer: Contains service interfaces, use cases (e.g., model inference service), and DTO mappings.

- Infrastructure layer: Integrates external services like Azure ML endpoints or ONNX model execution engines.

- API layer (Web): Handles HTTP/gRPC requests, authentication, and routing.

Tooling tip:

Use project templates like Clean Architecture Solution Template to kickstart clean .NET API design.

API versioning strategy

As AI models evolve, so should your API. It’s essential to implement versioning from the beginning, even if you only have one model version initially. This protects consumers from breaking changes and allows parallel A/B testing or model migrations.

Common versioning strategies in .NET:

- URI versioning: /api/v1/predict

- Header-based versioning: Use a custom HTTP header like X-API-Version

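.NET does not version APIs out of the box, but the ASP.NET API Versioning libraries support both strategies: Asp.Versioning.Mvc (or Asp.Versioning.Http for minimal APIs), which supersede the older Microsoft.AspNetCore.Mvc.Versioning NuGet package.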
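The versioning package handles the parsing for you, but the two strategies above reduce to resolution logic like the following. This is a hand-rolled sketch for illustration only, not the package's API; the default version of "1" and the rule that a URI segment takes precedence over the header are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Resolve the requested API version: URI segment first (/api/v2/predict),
// then the X-API-Version header, then a default.
string ResolveApiVersion(string path, IReadOnlyDictionary<string, string> headers, string defaultVersion = "1")
{
    // URI versioning: a "/v{number}" path segment.
    var match = Regex.Match(path, @"/v(\d+)(/|$)", RegexOptions.IgnoreCase);
    if (match.Success)
        return match.Groups[1].Value;

    // Header-based versioning: accept "2" or "v2".
    if (headers.TryGetValue("X-API-Version", out var header) && !string.IsNullOrWhiteSpace(header))
        return header.Trim().TrimStart('v', 'V');

    return defaultVersion;
}

// HTTP header names are case-insensitive, so use a case-insensitive dictionary.
var noHeaders = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
var v3Header = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase) { ["x-api-version"] = "v3" };

Console.WriteLine(ResolveApiVersion("/api/v2/predict", noHeaders)); // 2
Console.WriteLine(ResolveApiVersion("/api/predict", v3Header));     // 3
Console.WriteLine(ResolveApiVersion("/api/predict", noHeaders));    // 1
```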

Authentication and authorization

AI predictions are often tied to sensitive data, which makes security non-negotiable.

Best practices for securing AI APIs:

- Use JWT (JSON Web Tokens) for stateless authentication.

- Integrate with Microsoft Entra ID (formerly Azure Active Directory) for enterprise-wide identity management.

- Apply role-based access control (RBAC) to restrict access to specific endpoints or model types based on user roles.

Example: Allow marketing users access to sentiment prediction APIs, while restricting financial models to authorized analysts only.
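In ASP.NET Core you would normally express this with [Authorize(Roles = ...)] attributes or authorization policies. The sketch below shows the underlying check against the role claims that a validated JWT produces; the model families and role names (Marketing, Analyst) are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Claims;

// Hypothetical policy: which roles may call which model family.
var allowedRoles = new Dictionary<string, string[]>
{
    ["sentiment"] = new[] { "Marketing", "Analyst" },
    ["financial-risk"] = new[] { "Analyst" },
};

// True when the caller holds at least one role permitted for the model family.
bool CanInvoke(ClaimsPrincipal user, string modelFamily) =>
    allowedRoles.TryGetValue(modelFamily, out var roles) && roles.Any(user.IsInRole);

// Build a principal like the one a JWT bearer handler produces after validating a token.
ClaimsPrincipal UserWithRoles(params string[] roleNames) =>
    new ClaimsPrincipal(new ClaimsIdentity(roleNames.Select(r => new Claim(ClaimTypes.Role, r)), "Bearer"));

var marketer = UserWithRoles("Marketing");
Console.WriteLine(CanInvoke(marketer, "sentiment"));      // True
Console.WriteLine(CanInvoke(marketer, "financial-risk")); // False
```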

Integrating AI and ML capabilities in .NET APIs

Now that your API architecture is in place, the next step is enabling it to deliver intelligent outputs. This means connecting your .NET endpoints to AI models, whether pre-trained models from Azure Cognitive Services or custom models built with frameworks like ML.NET or trained and hosted on Azure Machine Learning. The integration approach you choose depends on your use case, performance needs, and model hosting preferences.

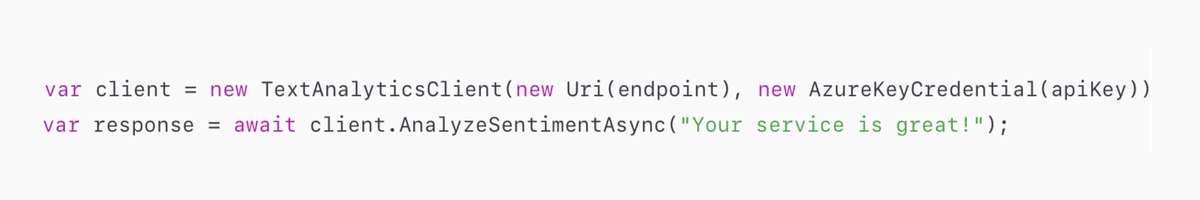

A. Connecting to Azure Cognitive Services

For many AI features like image recognition, translation, or sentiment analysis, you don’t need to build or train your own model. Azure Cognitive Services (now part of Azure AI services) offers enterprise-grade pre-trained models via simple REST APIs.

Example use cases:

- OCR and image tagging with Computer Vision API

- Sentiment analysis or key phrase extraction via Text Analytics API

- Real-time translation using Translator Text API

How to integrate with .NET:

- Use the Azure.AI.* client libraries from NuGet (for example, Azure.AI.TextAnalytics) for native .NET integration

- Authenticate via API keys or Managed Identities

- Call the Cognitive Service directly from your application or via a backend worker service

Best practices:

- Add retry logic and fallback responses for rate-limited or failed calls

- Monitor usage to avoid exceeding quotas and rate limits (especially on free or lower pricing tiers)

- Cache frequent calls where possible (e.g., translation of common terms)
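The Azure.AI.* client libraries already retry transient failures through the Azure SDK's built-in retry policy, so hand-written retry logic matters mainly when you call the REST endpoints directly with HttpClient. A minimal sketch of deciding whether, and when, to retry, assuming the service may send a Retry-After header with HTTP 429 and that three retries is an acceptable budget:

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

// Decide whether, and after how long, to retry a failed call to a Cognitive Services
// REST endpoint. Returns null when the caller should stop and serve a fallback instead.
TimeSpan? RetryDelay(HttpResponseMessage response, int attempt, int maxRetries = 3)
{
    int status = (int)response.StatusCode;
    bool transient = status == 429 || status >= 500;   // throttled or server-side failure
    if (!transient || attempt >= maxRetries) return null;

    // Prefer the service's own Retry-After hint (either a delay or an HTTP date).
    RetryConditionHeaderValue? retryAfter = response.Headers.RetryAfter;
    if (retryAfter?.Delta is TimeSpan delta) return delta;
    if (retryAfter?.Date is DateTimeOffset date)
    {
        TimeSpan wait = date - DateTimeOffset.UtcNow;
        return wait > TimeSpan.Zero ? wait : TimeSpan.Zero;
    }

    // No hint: exponential backoff of 1 s, 2 s, 4 s for attempts 0, 1, 2.
    return TimeSpan.FromSeconds(Math.Pow(2, attempt));
}

var throttled = new HttpResponseMessage(HttpStatusCode.TooManyRequests);
throttled.Headers.RetryAfter = new RetryConditionHeaderValue(TimeSpan.FromSeconds(5));
Console.WriteLine(RetryDelay(throttled, attempt: 0)); // 00:00:05
```

When RetryDelay returns null, serve the fallback response (for example, a cached result or a neutral default) instead of surfacing the error to the user.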

B. Serving custom ML models

Pre-built services are great for common use cases, but for domain-specific tasks, like detecting anomalies in IoT sensor data or classifying niche medical terms, you’ll need to serve custom-trained models.

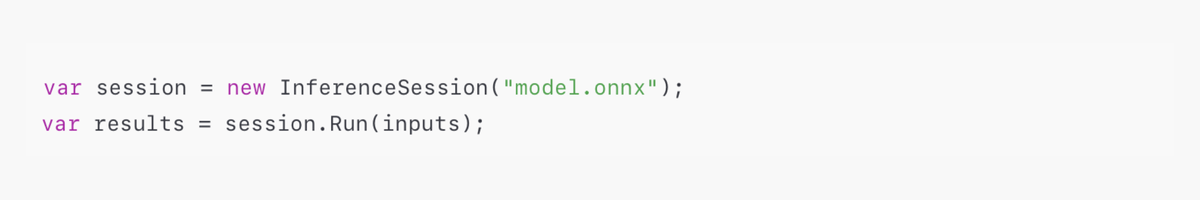

Option 1: Local inference in .NET

- ML.NET: Microsoft's native machine learning framework for .NET, ideal for structured data tasks (classification, regression, clustering).

- ONNX Runtime for .NET: Runs models trained in Python frameworks (PyTorch, TensorFlow) inside your .NET application once they are exported to the ONNX format.

Advantages:

- Low-latency predictions (no external call)

- Ideal for edge use cases or high-throughput systems

Watch out for: Increased memory usage, deployment complexity for large models, and model updates that require redeploying the application, unlike hosted services.
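One way to contain the memory cost is to load each model once per process and share it across requests. The sketch below simulates this with Lazy<T>; LoadModel is a hypothetical stand-in for something like creating an ONNX Runtime inference session, and the toy scoring function replaces a real model. In ASP.NET Core, registering the loaded model as a singleton achieves the same effect.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

int loadCount = 0;

// Hypothetical stand-in for an expensive model load (e.g. creating an ONNX Runtime session).
Func<float[], float> LoadModel()
{
    Interlocked.Increment(ref loadCount);
    Thread.Sleep(100); // simulate slow deserialization of a large model file
    return features => features.Length == 0 ? 0f : features[0] * 0.5f; // toy scoring function
}

// ExecutionAndPublication: concurrent first requests still trigger exactly one load.
var model = new Lazy<Func<float[], float>>(LoadModel, LazyThreadSafetyMode.ExecutionAndPublication);

// Simulate 50 requests arriving at once right after startup.
float[] results = await Task.WhenAll(Enumerable.Range(0, 50)
    .Select(i => Task.Run(() => model.Value(new[] { (float)i }))));

Console.WriteLine($"Model loaded {loadCount} time(s), served {results.Length} predictions");
// Model loaded 1 time(s), served 50 predictions
```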

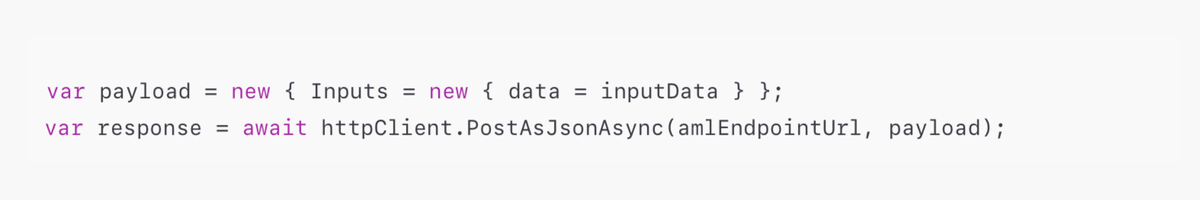

Option 2: Remote model hosting with Azure Machine Learning

If you want full control over your ML pipeline (training, versioning, and deployment), Azure Machine Learning (AML) is a scalable choice.

How it works:

- Train your model in Azure ML, or register an existing model with the Azure ML CLI

- Deploy it to a managed online endpoint, which exposes a REST scoring URI

- Access the model from your .NET API using HttpClient
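A sketch of building the scoring request, assuming key or token authentication sent as a bearer token. The endpoint URI, key, and payload shape are placeholders: the real input schema is whatever your scoring script or model signature expects. The optional azureml-model-deployment header targets a single deployment instead of the endpoint's traffic split.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

// Build a scoring request for an Azure ML online endpoint (sent later via HttpClient).
HttpRequestMessage BuildScoringRequest(Uri scoringUri, string bearerToken, object payload, string? deployment = null)
{
    var request = new HttpRequestMessage(HttpMethod.Post, scoringUri)
    {
        Content = new StringContent(JsonSerializer.Serialize(payload), Encoding.UTF8, "application/json")
    };
    // Endpoint key or Microsoft Entra token, sent as a bearer token.
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", bearerToken);
    // Optional: bypass the traffic split and target one deployment, e.g. "green".
    if (deployment is not null)
        request.Headers.Add("azureml-model-deployment", deployment);
    return request;
}

var scoringRequest = BuildScoringRequest(
    new Uri("https://my-endpoint.westeurope.inference.ml.azure.com/score"), // placeholder URI
    bearerToken: "ENDPOINT_KEY_OR_TOKEN",                                  // placeholder secret
    payload: new { data = new[] { new[] { 0.1, 0.2, 0.3 } } },             // hypothetical schema
    deployment: "green");

// In the API, send it with a shared HttpClient (e.g. from IHttpClientFactory):
// using var response = await httpClient.SendAsync(scoringRequest, cancellationToken);
Console.WriteLine($"{scoringRequest.Method} {scoringRequest.RequestUri}");
```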

Best practices:

- Use managed identity for secure access

- Monitor the endpoint via Azure Metrics Explorer

- Use autoscaling for high-load prediction endpoints

C. Hybrid inference strategies

Sometimes, it makes sense to combine both approaches.

Scenario:

- Use local inference (ONNX) for fast, frequently accessed models

- Use remote inference (Azure ML) for heavy models or low-frequency batch predictions

- Cache high-confidence results using Redis or Azure Cache for Redis

This gives you a balance of cost, latency, and flexibility, essential in high-scale production systems.
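A compact sketch of that routing, under stated assumptions: the caller already knows whether a model is lightweight, an in-memory ConcurrentDictionary stands in for Redis, and 0.9 is a hypothetical confidence threshold for caching. The model delegates are hypothetical too.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Stand-in for Redis / Azure Cache for Redis.
var cache = new ConcurrentDictionary<string, (string Label, double Confidence)>();
const double CacheThreshold = 0.9; // hypothetical: only cache confident answers

async Task<(string Label, double Confidence)> PredictAsync(
    string modelName, string input, bool isLightweight,
    Func<string, (string, double)> localModel,
    Func<string, Task<(string, double)>> remoteModel)
{
    string key = $"{modelName}:{input}";
    if (cache.TryGetValue(key, out var cached)) return cached;

    // Lightweight models run in-process (e.g. ONNX); heavy ones go to a remote endpoint.
    (string Label, double Confidence) result = isLightweight ? localModel(input) : await remoteModel(input);

    if (result.Confidence >= CacheThreshold) cache[key] = result;
    return result;
}

int remoteCalls = 0;
Func<string, (string, double)> localSentiment = text => ("positive", 0.95);
Func<string, Task<(string, double)>> remoteFraud = async text =>
{
    remoteCalls++;
    await Task.Delay(10); // simulate network latency to the hosted model
    return ("fraud", 0.97);
};

await PredictAsync("fraud-v2", "txn-123", isLightweight: false, localSentiment, remoteFraud);
await PredictAsync("fraud-v2", "txn-123", isLightweight: false, localSentiment, remoteFraud);
Console.WriteLine($"Remote calls: {remoteCalls}"); // Remote calls: 1 (second answer came from the cache)
```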

Integrating AI models is just the beginning. To ensure these APIs deliver business value at scale, they must be observable, performant, and cost-efficient. That’s where deployment monitoring, logging, and infrastructure tuning come into play, which we’ll explore next.

Performance, observability, and cost optimization

Deploying AI-enabled APIs is not just about inference accuracy; it’s about ensuring reliability at scale. APIs that integrate machine learning models can be highly resource-intensive, sensitive to latency, and prone to unpredictable usage spikes. To avoid operational bottlenecks and ensure a seamless developer and user experience, performance monitoring and cost control must be built into the deployment lifecycle from day one.

A. Monitoring and logging

Visibility into your AI API’s behavior is essential, not only for debugging but for tracking the health and relevance of your models over time.

Core logging areas:

- Input/output payloads: Log requests and responses (with PII masking).

- Inference metadata: Log model names, versions, response time, confidence scores.

- Failures and retries: Capture failed requests, timeouts, and retry attempts.
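A minimal sketch of masking PII before logging, plus a structured inference record carrying the metadata above. The two regular expressions (email addresses and long digit runs such as card or phone numbers) are illustrative, not a complete PII filter. With Serilog or ILogger you would pass the same fields as message-template properties rather than pre-serialized JSON.

```csharp
using System;
using System.Text.Json;
using System.Text.RegularExpressions;

// Illustrative patterns only: email addresses and runs of 9+ digits (cards, phone numbers).
var emailPattern = new Regex(@"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}", RegexOptions.Compiled);
var digitRunPattern = new Regex(@"\d(?:[ -]?\d){8,}", RegexOptions.Compiled);

string MaskPii(string text) =>
    digitRunPattern.Replace(emailPattern.Replace(text, "[EMAIL]"), "[NUMBER]");

// One structured record per inference: model identity, latency, confidence, masked input.
string InferenceLogEntry(string model, string version, double latencyMs, double confidence, string input) =>
    JsonSerializer.Serialize(new { model, version, latencyMs, confidence, input = MaskPii(input) });

Console.WriteLine(InferenceLogEntry("sentiment", "v2", 42.5, 0.91,
    "Call me on 555 123 4567 or write to jane.doe@example.com"));
```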

Recommended tooling:

- Application Insights: Tracks request performance, custom events, exception telemetry.

- Serilog with structured logging for .NET apps.

- Azure Monitor Logs: Query and analyze trends across services (e.g., model latency over time).

Real-world tip:

Use correlation IDs across services (especially in microservices or gRPC setups) to trace the full lifecycle of a prediction request.
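A sketch of the propagation logic, assuming a custom X-Correlation-ID header (a common convention, not a standard). ASP.NET Core, HttpClient, and Application Insights largely propagate the W3C traceparent header on their own for distributed tracing, so a custom ID like this is mainly useful as a business-friendly key you can return to clients or search for in logs. For gRPC, the same value would travel as request metadata.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;

// Hypothetical header name; many teams use X-Correlation-ID for this.
const string CorrelationHeader = "X-Correlation-ID";

// Reuse the caller's ID when present, otherwise start a new one.
string GetOrCreateCorrelationId(IReadOnlyDictionary<string, string> incomingHeaders) =>
    incomingHeaders.TryGetValue(CorrelationHeader, out var id) && !string.IsNullOrWhiteSpace(id)
        ? id
        : Guid.NewGuid().ToString("N");

// Stamp the same ID on every downstream call (pre-processing, inference, post-processing).
HttpRequestMessage CreateDownstreamRequest(HttpMethod method, string url, string correlationId)
{
    var request = new HttpRequestMessage(method, url);
    request.Headers.Add(CorrelationHeader, correlationId);
    return request;
}

var incoming = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase) { ["x-correlation-id"] = "req-7f3a" };
string requestCorrelationId = GetOrCreateCorrelationId(incoming);
var toModel = CreateDownstreamRequest(HttpMethod.Post, "https://inference.internal/score", requestCorrelationId); // placeholder URL
Console.WriteLine(string.Join(",", toModel.Headers.GetValues(CorrelationHeader))); // req-7f3a
```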

B. Performance tuning strategies

Adding AI inference to the request path can degrade API responsiveness if it isn't optimized. Here are best practices to keep response times low:

1. Response compression

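Enable compression (Brotli or Gzip) with ASP.NET Core's response compression middleware to reduce payload size, which is especially useful for image or NLP models that return large JSON outputs. Note that compression over HTTPS is disabled by default because of the security risks (such as BREACH) it can introduce.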
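To get a feel for the savings, the sketch below gzips a synthetic, deliberately repetitive JSON response of 500 entity predictions. Real savings depend on your payloads; the middleware performs the equivalent compression on each response.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Linq;
using System.Text.Json;

// Synthetic NER-style response: 500 predictions with repetitive field names and labels.
var predictions = Enumerable.Range(0, 500)
    .Select(i => new { entity = $"token-{i}", label = i % 2 == 0 ? "PERSON" : "ORG", confidence = 0.9 })
    .ToArray();
byte[] json = JsonSerializer.SerializeToUtf8Bytes(predictions);

byte[] Gzip(byte[] data)
{
    using var output = new MemoryStream();
    using (var gzip = new GZipStream(output, CompressionLevel.Fastest))
    {
        gzip.Write(data, 0, data.Length);
    } // disposing the GZipStream flushes the final compressed block
    return output.ToArray();
}

byte[] compressed = Gzip(json);
Console.WriteLine($"{json.Length} bytes of JSON -> {compressed.Length} bytes gzipped");
```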

2. Asynchronous I/O

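Use async/await so threads are not blocked while waiting on Azure ML endpoints or data sources. ASP.NET Core has no SynchronizationContext, so ConfigureAwait(false) matters mainly in shared library code (for example, a reusable inference client) that may also run in UI or classic ASP.NET apps.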
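A sketch of a non-blocking inference call with a per-call timeout. The callModel delegate is a hypothetical stand-in for an HttpClient call to a model endpoint, and returning a fixed fallback string on timeout is an assumption; your API might return a 503 or a cached answer instead.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Await the model call without blocking a thread, and cap how long it may take.
async Task<string> ScoreWithTimeoutAsync(
    Func<CancellationToken, Task<string>> callModel, TimeSpan timeout, CancellationToken requestAborted)
{
    using var cts = CancellationTokenSource.CreateLinkedTokenSource(requestAborted);
    cts.CancelAfter(timeout);
    try
    {
        // ConfigureAwait(false) as library code would use it; harmless in ASP.NET Core.
        return await callModel(cts.Token).ConfigureAwait(false);
    }
    catch (OperationCanceledException) when (!requestAborted.IsCancellationRequested)
    {
        // Our timeout fired (not a client disconnect): degrade instead of failing.
        return "timeout-fallback";
    }
}

// Simulated model endpoints: one answers in 50 ms, the other takes 5 s.
Func<CancellationToken, Task<string>> fastModel = async ct => { await Task.Delay(50, ct); return "positive"; };
Func<CancellationToken, Task<string>> slowModel = async ct => { await Task.Delay(5000, ct); return "positive"; };

Console.WriteLine(await ScoreWithTimeoutAsync(fastModel, TimeSpan.FromSeconds(1), CancellationToken.None));        // positive
Console.WriteLine(await ScoreWithTimeoutAsync(slowModel, TimeSpan.FromMilliseconds(200), CancellationToken.None)); // timeout-fallback
```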

3. Caching frequent inferences

For use cases like product categorization or entity recognition, you can cache frequent inputs and outputs using in-memory caching or Redis.
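A minimal sketch of a time-bounded inference cache. The key includes the model version, so a new model never serves answers cached from an old one, and a normalized input, so trivially different requests share an entry. In a real service you would more likely use IMemoryCache or IDistributedCache (backed by Redis); the categorize delegate is a hypothetical model.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

var entries = new ConcurrentDictionary<string, (string Value, DateTimeOffset Expires)>();
int modelCalls = 0;

string CachedPredict(string modelVersion, string input, Func<string, string> model, TimeSpan ttl)
{
    // Version in the key: a new model never serves answers cached from the old one.
    // Normalized input: "Gaming Laptop" and " gaming laptop" share an entry.
    string key = $"{modelVersion}|{input.Trim().ToLowerInvariant()}";
    DateTimeOffset now = DateTimeOffset.UtcNow;

    if (entries.TryGetValue(key, out var hit) && hit.Expires > now)
        return hit.Value;

    Interlocked.Increment(ref modelCalls);
    string value = model(input);
    entries[key] = (value, now + ttl);
    return value;
}

// Hypothetical product categorizer standing in for a real model.
Func<string, string> categorize = text =>
    text.Contains("laptop", StringComparison.OrdinalIgnoreCase) ? "Electronics" : "Other";

Console.WriteLine(CachedPredict("v3", "Gaming Laptop", categorize, TimeSpan.FromMinutes(10)));  // Electronics
Console.WriteLine(CachedPredict("v3", " gaming laptop", categorize, TimeSpan.FromMinutes(10))); // Electronics (cached)
Console.WriteLine($"Model invoked {modelCalls} time(s)");                                      // Model invoked 1 time(s)
```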

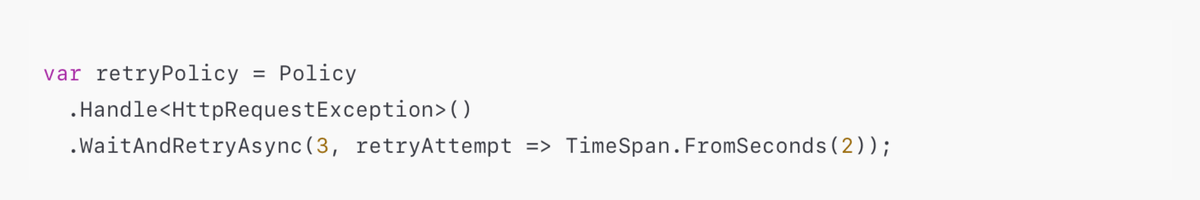

4. Resiliency with Polly

Use the Polly library to implement retry policies, timeouts, circuit breakers, and fallback mechanisms around calls to model endpoints.
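The sketch below hand-rolls two of the behaviors Polly provides (retry with exponential backoff and jitter, then a fallback) so you can see what the library does for you; it is not Polly's API. The delays, retry count, and fallback value are assumptions, and in production you would configure these through Polly itself (or Microsoft.Extensions.Http.Resilience, which builds on it).

```csharp
using System;
using System.Threading.Tasks;

async Task<T> RetryWithFallbackAsync<T>(
    Func<Task<T>> action, int maxRetries, TimeSpan baseDelay, Func<Exception, T> fallback)
{
    for (int attempt = 0; ; attempt++)
    {
        try
        {
            return await action();
        }
        catch (Exception ex) when (attempt < maxRetries)
        {
            // Back off 1x, 2x, 4x ... the base delay, plus jitter so many clients
            // do not retry against the model endpoint in lockstep.
            var delay = baseDelay * Math.Pow(2, attempt) + TimeSpan.FromMilliseconds(Random.Shared.Next(0, 50));
            Console.WriteLine($"Attempt {attempt + 1} failed ({ex.Message}); retrying in {delay.TotalMilliseconds:F0} ms");
            await Task.Delay(delay);
        }
        catch (Exception ex)
        {
            return fallback(ex); // retries exhausted: degrade gracefully
        }
    }
}

int calls = 0;
string label = await RetryWithFallbackAsync(
    async () =>
    {
        calls++;
        await Task.Yield();
        if (calls < 3) throw new InvalidOperationException("503 from model endpoint"); // simulated outage
        return "approved";
    },
    maxRetries: 3, baseDelay: TimeSpan.FromMilliseconds(100), fallback: _ => "manual-review");

Console.WriteLine($"{label} after {calls} calls"); // approved after 3 calls
```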

5. Load testing

Use k6, Apache JMeter, or Azure Load Testing to simulate production-level traffic, especially before deploying AI APIs behind customer-facing apps.

C. Cost awareness and optimization

AI models, especially those hosted via Azure ML or Cognitive Services, can quickly lead to unexpected cost spikes if left unmonitored.

Key cost levers:

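- Inference volume: Pay-per-call services such as Cognitive Services typically bill by volume (for example, per 1,000 text records or per million characters translated), while Azure ML online endpoints bill for the compute instances behind them for as long as they run.

- Model complexity: Larger models consume more CPU/GPU per call.

- Hosting tier: The instance type and count drive the cost of Azure ML endpoints; scale deployments down, or delete idle ones, when they are not in use.

- Autoscaling rules: Ensure infrastructure scales with demand, using KEDA on AKS or Azure Functions consumption plans.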
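A back-of-the-envelope estimate makes these levers concrete. The prices below are hypothetical placeholders, not actual Azure rates; the sketch contrasts pay-per-call billing, where caching directly reduces cost, with instance-hour billing, where cost depends on how many instances run and for how long.

```csharp
using System;

// Pay-per-call billing: only cache misses reach the billable service.
decimal MonthlyCallCost(long requestsPerMonth, decimal cacheHitRate, decimal pricePer1000Calls)
{
    long billableCalls = (long)Math.Ceiling(requestsPerMonth * (1m - cacheHitRate));
    return billableCalls / 1000m * pricePer1000Calls;
}

// Instance-hour billing (e.g. an online endpoint): cost tracks running instances, not calls.
decimal MonthlyComputeCost(int instances, decimal hoursPerMonth, decimal pricePerInstanceHour) =>
    instances * hoursPerMonth * pricePerInstanceHour;

// Hypothetical prices for illustration only.
const decimal PricePer1000Calls = 1.00m;
const decimal PricePerInstanceHour = 0.50m;

Console.WriteLine($"3M calls, no cache:   {MonthlyCallCost(3_000_000, 0m, PricePer1000Calls)}");
Console.WriteLine($"3M calls, 40% cached: {MonthlyCallCost(3_000_000, 0.40m, PricePer1000Calls)}");
Console.WriteLine($"2 instances, 730 h:   {MonthlyComputeCost(2, 730m, PricePerInstanceHour)}");
```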

Cost-saving tips:

- Offload batch processing to background jobs (e.g., using Azure Functions + Service Bus).

- Use model distillation to reduce the size of deep models used in production.

- Set up budgets and alerts in Azure Cost Management; budgets notify you (and can trigger automated actions) but do not cap spending on their own.

With performance and costs under control, the next frontier is maintaining model quality over time. As your AI APIs evolve, you’ll need robust strategies for managing model versions, testing new iterations, and minimizing regression risks. That’s the focus of the next section.

Managing model versioning and A/B testing in APIs

AI models, like software, are never “done.” They improve, drift, and occasionally regress. That’s why any enterprise-grade AI API needs a robust model lifecycle strategy, one that supports versioning, experimentation, and safe rollouts. This is especially critical in high-impact applications where model accuracy affects user experience, revenue, or safety.

Model versioning best practices

Without explicit version control, teams risk deploying untested models, losing reproducibility, or accidentally breaking dependent services.

Tips for clean model versioning in .NET APIs:

- Always version models explicitly, e.g., model-v1.onnx, model-v2.pkl.

- Store version metadata alongside the model: training date, dataset snapshot ID, metrics (accuracy, F1 score).

- Implement version-aware endpoints or headers, e.g.:

  - REST: POST /api/v2/predict

  - Header: X-Model-Version: v2
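A sketch of a metadata sidecar (model-v2.json next to model-v2.onnx) and of resolving the artifact for a requested X-Model-Version. The field names, date, dataset ID, and metric values are hypothetical examples; a model registry such as Azure ML's would normally hold this information for you.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Text.Json;

// Write model-{version}.json next to model-{version}.onnx.
string WriteModelMetadata(string directory, string version, DateOnly trainedOn,
    string datasetSnapshotId, IReadOnlyDictionary<string, double> metrics)
{
    string path = Path.Combine(directory, $"model-{version}.json");
    string json = JsonSerializer.Serialize(new
    {
        version,
        artifact = $"model-{version}.onnx",
        trainedOn = trainedOn.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture),
        datasetSnapshotId,
        metrics
    }, new JsonSerializerOptions { WriteIndented = true });
    File.WriteAllText(path, json);
    return path;
}

// Resolve the artifact for a requested X-Model-Version, falling back to a default.
string ResolveModelArtifact(string directory, string? requestedVersion, string defaultVersion)
{
    string version = string.IsNullOrWhiteSpace(requestedVersion) ? defaultVersion : requestedVersion.Trim();
    string metadataPath = Path.Combine(directory, $"model-{version}.json");
    if (!File.Exists(metadataPath))
        throw new ArgumentException($"Unknown model version '{version}'.");
    using JsonDocument doc = JsonDocument.Parse(File.ReadAllText(metadataPath));
    return Path.Combine(directory, doc.RootElement.GetProperty("artifact").GetString()!);
}

string modelDir = Path.Combine(Path.GetTempPath(), "models-" + Guid.NewGuid().ToString("N"));
Directory.CreateDirectory(modelDir);

// Hypothetical values for illustration.
WriteModelMetadata(modelDir, "v2", new DateOnly(2025, 3, 14), "snapshot-0412",
    new Dictionary<string, double> { ["accuracy"] = 0.91, ["f1"] = 0.88 });

Console.WriteLine(ResolveModelArtifact(modelDir, "v2", defaultVersion: "v2")); // .../model-v2.onnx
```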

Use tools like:

- Azure ML model registry to store and tag model artifacts

- Git + DVC for full ML pipeline versioning

- Blob Storage versioning (if models are hosted manually)

Model deployment strategies

There’s no one-size-fits-all way to deploy new models. These three strategies are commonly used in production systems:

1. Blue-green deployment

- Stand up a new environment with the new model (green)

- Keep the current environment (blue) live

- Switch traffic fully to green once validated

Use case: Major model change; rollback needs to be quick and full

2. Canary deployment

- Release the new model to a small fraction (e.g. 5–10%) of real traffic

- Monitor real-world performance, then gradually increase share

- Can be implemented at the API gateway or service-router level

Use case: Minimize blast radius of regression

3. Shadow deployment

- The new model receives the same requests as production, but its responses are not returned to users

- Its outputs are compared against the production model's, so you can test on real traffic without real-world consequences

Use case: Side-by-side validation without impacting users
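A minimal shadow-deployment sketch: the primary model's answer is returned immediately, while the candidate scores the same input in the background and only the comparison is recorded. The in-memory ConcurrentBag is an assumption standing in for telemetry, and both models are hypothetical delegates.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Records (input, primary answer, shadow answer) for later analysis.
var comparisons = new ConcurrentBag<(string Input, string Primary, string Shadow)>();

async Task<string> PredictWithShadowAsync(
    string input, Func<string, Task<string>> primary, Func<string, Task<string>> shadow)
{
    string result = await primary(input);

    // Fire and forget: the shadow model's latency or failures never reach the caller.
    _ = Task.Run(async () =>
    {
        try
        {
            comparisons.Add((input, result, await shadow(input)));
        }
        catch (Exception)
        {
            // In a real service, log the shadow failure and move on.
        }
    });

    return result;
}

// Hypothetical models: v1 is in production, v2 is the candidate.
Func<string, Task<string>> v1 = text => Task.FromResult("negative");
Func<string, Task<string>> v2 = async text => { await Task.Delay(20); return "neutral"; };

string answer = await PredictWithShadowAsync("The update broke my app", v1, v2);
Console.WriteLine(answer); // negative: users only ever see the primary model's output
```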

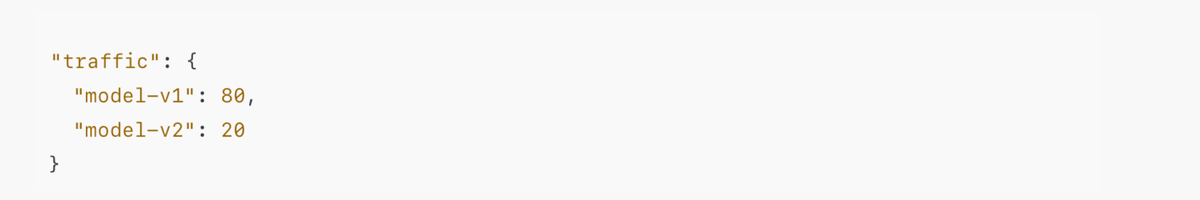

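In Azure ML managed online endpoints, you can split live traffic across deployments by percentage (for example, 90% to the current "blue" deployment and 10% to a new "green" one), and you can mirror a share of traffic to a deployment for shadow testing.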
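Azure ML applies that split on the server side. If you route between self-hosted model versions in your own gateway or API layer, the core of a percentage-based split looks like this sketch; the deployment names and weights are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Choose a deployment with probability proportional to its weight (e.g. blue=90, green=10).
string PickDeployment(IReadOnlyList<(string Name, int Weight)> deployments, Random random)
{
    int total = deployments.Sum(d => d.Weight);
    int roll = random.Next(total); // uniform in [0, total)
    foreach (var (name, weight) in deployments)
    {
        if (roll < weight) return name;
        roll -= weight;
    }
    throw new InvalidOperationException("Deployment weights must sum to a positive number.");
}

var split = new List<(string Name, int Weight)> { ("blue", 90), ("green", 10) };
var rng = new Random(42); // seeded so the demo is repeatable

var counts = Enumerable.Range(0, 10_000)
    .Select(_ => PickDeployment(split, rng))
    .GroupBy(n => n)
    .ToDictionary(g => g.Key, g => g.Count());

Console.WriteLine($"blue={counts["blue"]}, green={counts["green"]}"); // roughly 9,000 / 1,000
```

A random split like this suits canary rollouts; when the same user must consistently see the same model, assign by a stable hash of the user ID instead.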

A/B testing and experimentation

Beyond deployments, enterprises often need to run controlled experiments to understand the effect of a model change.

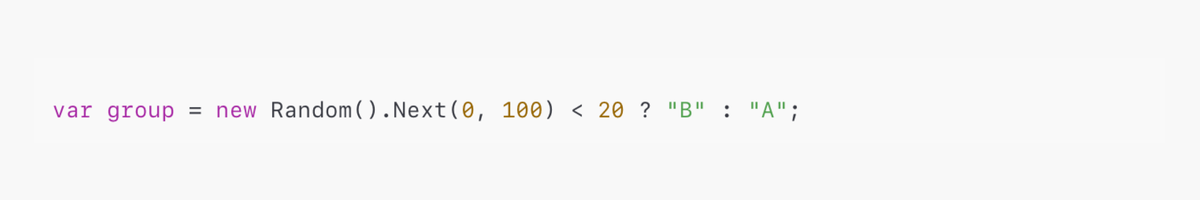

Example A/B testing flow in .NET API:

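- Use middleware to assign each incoming user to a test group, randomly but consistently (for example, by hashing a stable user ID so the same user always gets the same model).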

- Route requests to the corresponding model or endpoint.

- Log the assigned version and prediction confidence alongside any feedback, conversions, or downstream events.

- Analyze results using telemetry or BI tools to determine which model performs best.
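A sketch of the assignment step from the first item above, assuming a stable user ID is available on each request. It hashes the experiment name and user ID with SHA-256, because string.GetHashCode() is randomized per process in .NET and would reshuffle users on every restart; the experiment name and percentages are hypothetical.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Deterministically map (experiment, user) to a bucket 0..99, then to group A or B.
string AssignGroup(string userId, string experiment, int percentInB)
{
    byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes($"{experiment}:{userId}"));
    uint bucket = BitConverter.ToUInt32(hash, 0) % 100;
    return bucket < percentInB ? "B" : "A";
}

// Middleware would call this once per request and stash the group (e.g. in HttpContext.Items)
// so the model router and the telemetry both see the same assignment.
Console.WriteLine(AssignGroup("user-1234", "sentiment-v3-rollout", percentInB: 10));
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user in group B for one test is not automatically in group B for the next.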

Best practices:

- Test on relevant metrics (accuracy, latency, user conversion)

- Limit exposure during early rollout

- Avoid multivariate changes; test one thing at a time

Even with strong architectural foundations, the difference between a prototype and production-grade system often comes down to operational readiness. Let’s wrap up with a concise checklist of implementation best practices that can help CTOs and engineering leaders validate that their AI APIs are truly enterprise-ready.

Real-world implementation checklist

For technical leaders, ensuring a successful AI API deployment means aligning architecture, tooling, and operations into a repeatable, scalable delivery framework. This section serves as a practical implementation checklist, a high-level audit covering the must-have capabilities across your API pipeline, model integration, and runtime environment.

Pro tip: Conduct this audit before every new model deployment or feature integration. If you're missing two or more rows from this checklist, you're likely exposing yourself to performance or reliability risk.

Conclusion: Engineering for intelligence and impact

Designing AI-ready APIs in .NET isn't just about writing code; it’s about building resilient, scalable systems that can adapt as your AI strategy matures. From smart architectural decisions like REST vs. gRPC, to responsible monitoring, to precise version control and A/B testing, each component plays a role in bringing machine learning into real-world production environments.

By applying these best practices, CIOs and CTOs can turn experimental models into trusted, high-performing services, capable of delivering business value reliably, ethically, and efficiently.

Need support operationalizing your AI strategy?

Our .NET engineering team at CIGen specializes in building secure, AI-integrated APIs on Azure. Reach out for a free consultation and let’s talk through your use case.